Stromgren Sphere: An example “chalkboard derivation”

(updated for 2013)

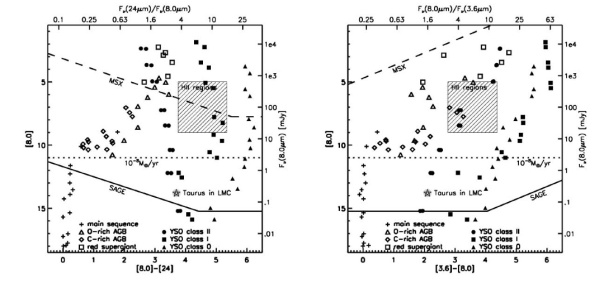

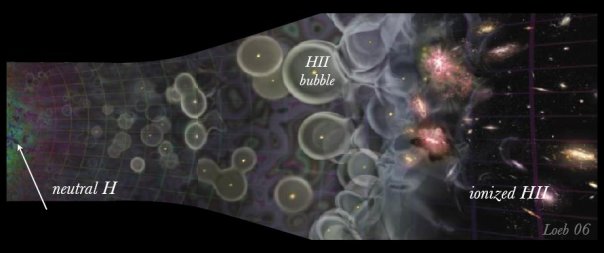

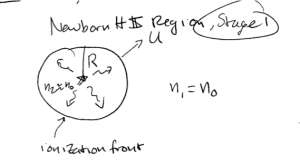

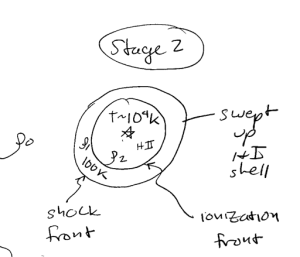

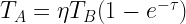

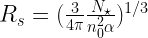

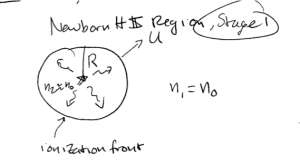

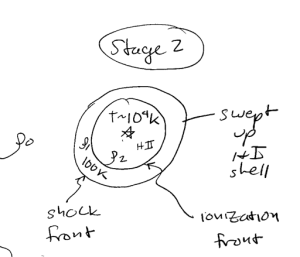

The Stromgren sphere is a simplified analysis of the size of HII regions. Massive O and B stars emit many high-energy photons, which ionize their surroundings and create HII regions. We assume that such a star is embedded in a uniform medium of neutral hydrogen. A sphere of radius r around this star will become ionized; r is called the “Stromgren radius”. The volume of the ionized region will be such that the rate at which ionized hydrogen recombines equals the rate at which the star emits ionizing photons (i.e. all of the ionizing photons are “used up” re-ionizing hydrogen as it recombines).

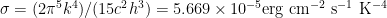

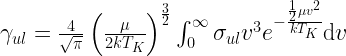

The recombination rate density is  , where

, where  is the recombination coefficient (in

is the recombination coefficient (in  and

and  is the number density (assuming fully ionized gas and only hydrogen, the electron and proton densities are equal). The total rate of ionizing photons (in photons per second) in the volume of the sphere is

is the number density (assuming fully ionized gas and only hydrogen, the electron and proton densities are equal). The total rate of ionizing photons (in photons per second) in the volume of the sphere is  . Setting the rates of ionization and recombination equal to one another, we get

. Setting the rates of ionization and recombination equal to one another, we get

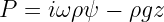

, and solving for r,

, and solving for r,

Typical values for the above variables are  ,

,  and

and  , implying Stromgren radii of 10 to 100 pc. See the journal club (2013) article for discussion of Stromgren’s seminal 1939 paper.

, implying Stromgren radii of 10 to 100 pc. See the journal club (2013) article for discussion of Stromgren’s seminal 1939 paper.
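The balance between recombinations and ionizing photons can be checked numerically. The sketch below is a minimal illustration rather than part of the original derivation: the function name `stromgren_radius_pc` and the input values (an ionizing rate of 1e49 photons per second, a recombination coefficient of 3e-13 cm^3 s^-1, and densities of 1 and 10 cm^-3) are assumptions chosen to be representative of an O star in diffuse gas.

```python
import math

PC_IN_CM = 3.0857e18  # centimetres per parsec


def stromgren_radius_pc(ionizing_rate, alpha, density):
    """Radius (pc) at which recombinations balance the ionizing photon rate.

    ionizing_rate: ionizing photons emitted per second
    alpha: recombination coefficient in cm^3 s^-1
    density: hydrogen number density in cm^-3 (electrons = protons)
    """
    # Volume in which alpha * n^2 recombinations use up every ionizing photon.
    volume_cm3 = ionizing_rate / (alpha * density**2)
    radius_cm = (3.0 * volume_cm3 / (4.0 * math.pi)) ** (1.0 / 3.0)
    return radius_cm / PC_IN_CM


# Illustrative inputs (assumed, not measured values).
r_n10 = stromgren_radius_pc(1e49, 3e-13, 10.0)
r_n1 = stromgren_radius_pc(1e49, 3e-13, 1.0)
print(f"n = 10 cm^-3: r = {r_n10:.1f} pc")
print(f"n = 1 cm^-3:  r = {r_n1:.1f} pc")
```

With these assumed inputs both radii fall in the 10 to 100 pc range, and the ratio of the two illustrates the $r \propto n^{-2/3}$ scaling.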

How do we know there is an ISM?

(updated for 2013)

Early astronomers pointed to 3 lines of evidence for the ISM:

- Extinction. The ISM obscures the light from background stars. In 1919, Barnard (JC 2011, 2013) called attention to these “dark markings” on the sky, and put forward the (correct) hypothesis that these were the silhouettes of dark clouds. A good rule of thumb for the amount of extinction present is 1 magnitude of extinction per kpc (for typical, mostly unobscured lines-of-sight).

- Reddening. Even when the ISM doesn’t completely block background starlight, it scatters it. Shorter-wavelength light is preferentially scattered, so stars behind obscuring material appear redder than normal. If a star’s true color is known, its observed color can be used to infer the column density of the ISM between us and the star. Robert Trumpler first used measurements of the apparent “cuspiness” and the brightnesses of star clusters in 1930 to argue for the presence of this effect. Reddening of stars of “known” color is the basis of NICER and related techniques used to map extinction today.

- Stationary Lines. Spectral observations of binary stars show Doppler-shifted lines corresponding to the radial velocity of each star. In addition, some of these spectra exhibit stationary (i.e. not Doppler-shifted) absorption lines due to stationary material between us and the binary system. Johannes Hartmann first noticed this in 1904 when investigating the spectrum of $\delta$ Orionis: “The calcium line at $\lambda 3934$ [angstroms] exhibits a very peculiar behavior. It is distinguished from all the other lines in this spectrum, first by the fact that it always appears extraordinarily weak, but almost perfectly sharp… Closer study on this point now led me to the quite surprising result that the calcium line… does not share in the periodic displacements of the lines caused by the orbital motion of the star.”

Helpful References: Good discussion of the history of extinction and reddening, from Michael Richmond.

A Sense of Scale

(updated for 2013)

How dense (or not) is the ISM?

- Dense cores:

- Typical ISM: $n \sim 1\ \mathrm{cm^{-3}}$

- This room: 1 mol / 22.4 L $\approx 3\times10^{19}\ \mathrm{cm^{-3}}$

- XHV (eXtremely High Vacuum), the best human-made vacuum:

- Density of stars in the Milky Way:

In other words, most of the ISM is at a density far below the densities and pressures we can reproduce in the lab. Thus, the details of most of the microphysics in the ISM are still poorly understood. We also see that the density of stars in the Galaxy is quite small – only a few times the average particle density of the ISM.

See also the interstellar cloud properties table and conversions between angular and linear scale.

Density of the Milky Way’s ISM

(updated for 2013)

How do we know that $n \sim 1\ \mathrm{cm^{-3}}$ in the ISM? From the rotation curve of the Milky Way (and some assumptions about the mass ratio of gas to gas+stars+dark matter), we can infer the total gas mass $M_{\rm gas}$.

Maps of HI and CO reveal the extent of our galaxy: a disk diameter $D$ of order tens of kpc and a thickness $h$ of order 100 pc (the scale height of HI).

This implies an approximate volume of

$$V \approx \frac{\pi D^2 h}{4},$$

which yields a density of

$$\rho \approx \frac{M_{\rm gas}}{V}.$$
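The mass-over-volume estimate is easy to reproduce. The sketch below treats the gas as a uniform cylinder. The function name `mean_gas_density` and the inputs (a gas mass of 7e9 solar masses, a 40 kpc diameter, and a 140 pc thickness) are illustrative assumptions, not values taken from these notes, and dividing by the hydrogen mass ignores helium.

```python
import math

KPC_IN_CM = 3.0857e21  # centimetres per kiloparsec
PC_IN_CM = 3.0857e18   # centimetres per parsec
M_SUN_G = 1.989e33     # solar mass in grams
M_H_G = 1.6735e-24     # hydrogen atom mass in grams


def mean_gas_density(gas_mass_msun, diameter_kpc, thickness_pc):
    """Return (mass density in g cm^-3, H-equivalent number density in cm^-3)
    for gas spread uniformly through a cylindrical disk."""
    radius_cm = 0.5 * diameter_kpc * KPC_IN_CM
    volume_cm3 = math.pi * radius_cm**2 * thickness_pc * PC_IN_CM
    rho = gas_mass_msun * M_SUN_G / volume_cm3
    return rho, rho / M_H_G


# Assumed, order-of-magnitude inputs for the Milky Way gas disk.
rho_example, n_example = mean_gas_density(7e9, 40.0, 140.0)
print(f"rho ~ {rho_example:.1e} g cm^-3, n ~ {n_example:.1f} cm^-3")
```

Under these assumptions the number density comes out at order unity per cubic centimetre, which is the point of the estimate; the result scales linearly with the adopted gas mass and inversely with the adopted volume.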

Density of the Intergalactic Medium

(updated for 2013)

From cosmology observations, we know the universe to be very nearly flat ($\Omega \approx 1$). This implies that the mean density of the universe is $\rho = \rho_{\rm crit} = 3H_0^2/(8\pi G) \sim 10^{-29}\ \mathrm{g\,cm^{-3}}$.

This places an upper limit on the density of the Intergalactic Medium.
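For a flat universe the mean density equals the critical density, $3H_0^2/(8\pi G)$, so the upper limit can be evaluated directly. The sketch below is a minimal example: the Hubble constant of 70 km/s/Mpc is an assumed round value, and converting to a number density by dividing by the hydrogen mass treats all of the mass as hydrogen atoms, which overstates the baryonic density.

```python
import math

G_CGS = 6.674e-8       # gravitational constant, cm^3 g^-1 s^-2
MPC_IN_CM = 3.0857e24  # centimetres per megaparsec
M_H_G = 1.6735e-24     # hydrogen atom mass in grams


def critical_density(h0_km_s_mpc):
    """Critical density (g cm^-3) for a Hubble constant given in km/s/Mpc."""
    h0_per_s = h0_km_s_mpc * 1.0e5 / MPC_IN_CM
    return 3.0 * h0_per_s**2 / (8.0 * math.pi * G_CGS)


rho_crit = critical_density(70.0)  # assumed H0
n_max = rho_crit / M_H_G           # upper limit if every gram were hydrogen
print(f"rho_crit ~ {rho_crit:.2e} g cm^-3, n < {n_max:.1e} cm^-3")
```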

Composition of the ISM

(updated for 2013)

- Gas: by mass, gas is 60% Hydrogen, 30% Helium. By number, gas is 88% H, 10% He, and 2% heavier elements

- Dust: The term “dust” applies roughly to any molecule too big to name. The size distribution is biased towards small (0.2 $\mu$m) particles, with an approximate distribution $N(a) \propto a^{-3.5}$. The density of dust in the galaxy is roughly 1% of the gas density (by mass).

- Cosmic Rays: Charged, high-energy (anti)protons, nuclei, electrons, and positrons. Cosmic rays have an energy density of order $1\ \mathrm{eV\,cm^{-3}}$. The equivalent mass density (using $E = mc^2$) is of order $10^{-33}\ \mathrm{g\,cm^{-3}}$.

- Magnetic Fields: Typical field strengths in the MW are a few $\mu$G. This is strong enough to confine cosmic rays.

Bruce Draine’s List of constituents in the ISM:

(updated for 2013)

- Gas

- Dust

- Cosmic Rays*

- Photons**

- B-Field

- Gravitational Field

- Dark Matter

*cosmic rays are highly relativistic, super-energetic ions and electrons

**photons include:

- The Cosmic Microwave Background (2.7 K)

- starlight from stellar photospheres (UV, optical, NIR,…)

- $h\nu$ from transitions in atoms, ions, and molecules

- “thermal emission” from dust (heated by starlight, AGN)

- free-free emission (bremsstrahlung) in plasma

- synchrotron radiation from relativistic electrons

- $\gamma$-rays from nuclear transitions

His list of “phases” from Table 1.3:

- Coronal gas (Hot Ionized Medium, or “HIM”): $T \gtrsim 10^{5.5}$ K. Shock-heated from supernovae. Fills half the volume of the galaxy, and cools in about 1 Myr.

- HII gas: Ionized mostly by O and early B stars. Called an “HII region” when confined by a molecular cloud, otherwise called “diffuse HII”.

- Warm HI (Warm Neutral Medium, or “WNM”): atomic, $T \sim 5000$ K. $n_H \sim 0.6\ \mathrm{cm^{-3}}$. Heated by starlight, photoelectric effect, and cosmic rays. Fills ~40% of the volume.

- Cool HI (Cold Neutral Medium, or “CNM”). $T \sim 100$ K, $n_H \sim 30\ \mathrm{cm^{-3}}$. Fills ~1% of the volume.

- Diffuse molecular gas. Where HI self-shields from UV radiation to allow $\mathrm{H_2}$ formation on the surfaces of dust grains in cloud interiors. This occurs at $n_H \sim 100\ \mathrm{cm^{-3}}$.

- Dense Molecular gas. “Bound” according to Draine (though maybe not). $n_H \sim 10^3$ to $10^6\ \mathrm{cm^{-3}}$. Sites of star formation. See also Bok Globules (JC 2013).

- Stellar Outflows. $T \sim 50$ to $10^3$ K. Winds from cool stars.

These phases are fluid and dynamic, and change on a variety of time and spatial scales. Examples include growth of an HII region, evaporation of molecular clouds, the interface between the ISM and IGM, cooling of supernova remnants, mixing, recombination, etc.

Topology of the ISM

(updated for 2013)

A grab-bag of properties of the Milky Way

- HII scale height: 1 kpc

- CO scale height: 50-75 pc

- HI scale height: 130-400 pc

- Stellar scale height: 100 pc in spiral arm, 500 pc in disk

- Stellar mass: $\sim 5\times10^{10}\ M_\odot$

- Dark matter mass: $\sim 5\times10^{10}\ M_\odot$

- HI mass: $\sim 2.9\times10^{9}\ M_\odot$

- H2 mass (inferred from CO): $\sim 0.84\times10^{9}\ M_\odot$

- HII mass: $\sim 1.12\times10^{9}\ M_\odot$

- -> total gas mass $\sim 6.7\times10^{9}\ M_\odot$ (including He).

- Total MW mass within 15 kpc: $\sim 10^{11}\ M_\odot$ (using the Galaxy’s rotation curve). About 50% dark matter.

So the ISM is a relatively small constituent of the Galaxy (by mass).

The Sound Speed

(updated for 2013)

The speed of sound is the speed at which pressure disturbances travel in a medium. It is defined as

$$c_s \equiv \sqrt{\frac{\partial P}{\partial \rho}},$$

where $P$ and $\rho$ are pressure and mass density, respectively. For a polytropic gas, i.e. one defined by the equation of state $P \propto \rho^{\gamma}$, this becomes $c_s = \sqrt{\gamma P/\rho}$. $\gamma$ is the adiabatic index (ratio of specific heats), and $\gamma = 5/3$ describes a monatomic gas.

For an isothermal gas where the ideal gas equation of state $P = \rho k T/(\mu m_H)$ holds, $c_s = \sqrt{kT/(\mu m_H)}$. Here, $\mu$ is the mean molecular weight (a factor that accounts for the chemical composition of the gas), and $m_H$ is the hydrogen atomic mass. Note that for pure molecular hydrogen $\mu = 2$. For molecular gas with ~10% He by number and trace metals, $\mu \approx 2.33$ is often used.

A gas can be approximated as isothermal if the sound wave period is much longer than the (radiative) cooling time of the gas: any increase in temperature due to compression by the wave is then followed almost immediately by radiative cooling to the original equilibrium temperature, well before the next compression occurs. Many astrophysical situations in the ISM are close to being isothermal, so the isothermal sound speed is often used. For example, in conditions where temperature and density are independent, such as H II regions (where the gas temperature is set by the ionizing star’s spectrum), the gas is very close to isothermal.
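As a quick numerical check on the isothermal sound speed, $\sqrt{kT/(\mu m_H)}$, the sketch below evaluates it for atomic hydrogen at a few temperatures and for molecular gas at 20 K. The function name `isothermal_sound_speed_kms` and the choice of $\mu = 2.33$ for molecular gas are assumptions made for this example.

```python
import math

K_B = 1.380649e-16  # Boltzmann constant, erg/K
M_H = 1.6735e-24    # hydrogen atom mass, g


def isothermal_sound_speed_kms(temperature_k, mu=1.0):
    """Isothermal sound speed sqrt(k T / (mu m_H)) in km/s."""
    return math.sqrt(K_B * temperature_k / (mu * M_H)) / 1.0e5


# Atomic hydrogen (mu = 1) at three temperatures.
speeds = {t: isothermal_sound_speed_kms(t) for t in (10.0, 100.0, 1000.0)}
# Molecular gas with helium (mu = 2.33 is an assumed, commonly used value).
cs_dense = isothermal_sound_speed_kms(20.0, mu=2.33)

for t, cs in speeds.items():
    print(f"T = {t:6.0f} K: c_s = {cs:.2f} km/s")
print(f"molecular gas at 20 K: c_s = {cs_dense:.2f} km/s")
```

The speed scales as $T^{1/2}$, so each factor of 100 in temperature changes it by a factor of 10.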

Hydrogen “Slang”

(updated for 2013)

Lyman limit: the minimum energy needed to remove an electron from a Hydrogen atom. A “Lyman limit photon” is a photon with at least this energy.

$$E = 13.6\ \mathrm{eV} = 1\ \mathrm{Ry} = h c R_H,$$

where $R_H \approx 1.097\times10^{7}\ \mathrm{m^{-1}}$ is the Rydberg constant, which has units of inverse length. This energy corresponds to the Lyman limit wavelength as follows:

$$\lambda = \frac{hc}{E} = \frac{1}{R_H} \approx 912\ \text{Å}.$$

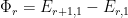

Lyman series: transitions to and from the n=1 energy level of the Bohr atom. The first line in this series was discovered in 1906 using UV studies of electrically excited hydrogen gas.

Balmer series: transitions to and from the n=2 energy level. Discovered in 1885; since these are optical transitions, they were more easily observed than the UV Lyman series transitions.

There are also other named series corresponding to higher n. Examples include Paschen (n=3), Brackett (n=4), and Pfund (n=5). The lowest energy (longest wavelength) transition of a series is designated $\alpha$, the next lowest energy is $\beta$, and so on. For example, the transition from n=2 to 1 is Lyman alpha, or $\mathrm{Ly}\alpha$, while the transition from n=7 to 4 is Brackett gamma, or $\mathrm{Br}\gamma$. The wavelength of a given transition can be computed via the Rydberg equation:

$$\frac{1}{\lambda} = R_H \left| \frac{1}{n_f^2} - \frac{1}{n_i^2} \right|,$$

where $n_i$ and $n_f$ are the initial and final energy levels of the electron, respectively. See this handout for a pictorial representation of the low n transitions in hydrogen. Note that the Lyman (or Balmer, Paschen, etc.) limit can be computed by inserting $n_i = \infty$ in the above equation.

The Lyman continuum corresponds to the region of the spectrum near the Lyman limit, where the spacing between energy levels becomes comparable to spectral line widths and so individual lines are no longer distinguishable. Such continua exist for each series of lines.
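The Rydberg equation makes the named transitions easy to tabulate. The sketch below is a minimal example: the function name `transition_wavelength_angstrom` is hypothetical, the value used for the hydrogen Rydberg constant is the standard one, and the wavelengths are vacuum wavelengths. Passing `math.inf` as the upper level gives the series limit.

```python
import math

R_H = 1.0967758e7  # Rydberg constant for hydrogen, m^-1


def transition_wavelength_angstrom(n_upper, n_lower):
    """Vacuum wavelength (Angstrom) of the hydrogen transition between
    levels n_upper and n_lower; n_upper may be math.inf for a series limit."""
    inverse_wavelength = R_H * (1.0 / n_lower**2 - 1.0 / n_upper**2)
    return 1.0e10 / inverse_wavelength


lyman_alpha = transition_wavelength_angstrom(2, 1)
h_alpha = transition_wavelength_angstrom(3, 2)
brackett_gamma = transition_wavelength_angstrom(7, 4)
lyman_limit = transition_wavelength_angstrom(math.inf, 1)

print(f"Ly alpha:    {lyman_alpha:8.1f} A")
print(f"H alpha:     {h_alpha:8.1f} A")
print(f"Br gamma:    {brackett_gamma:8.1f} A")
print(f"Lyman limit: {lyman_limit:8.1f} A")
```

The ordering that comes out (Lyman in the UV, Balmer in the optical, Brackett in the near-infrared) matches the description of the series above.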

Chemistry

(updated for 2013)

See Draine Table 1.4 for elemental abundances for the Sun (and thus presumably for the ISM near the Sun).

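By number: $\mathrm{H : He : C} \approx 1 : 0.1 : 3\times10^{-4}$;

by mass: $\mathrm{H : He : C} \approx 1 : 0.4 : 3.5\times10^{-3}$.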

However, these ratios vary by position in the galaxy, especially for heavier elements (which depend on stellar processing). For example, the abundance of heavy elements (Z ≥ 6, i.e. carbon and heavier) at the Sun’s position is about half that at the Galactic center. Even though metals account for only about 1% of the mass, they dominate most of the important chemistry, ionization, and heating/cooling processes. They are essential for star formation, as they allow molecular clouds to cool and collapse.

Dissociating molecules takes less energy than ionizing atoms, in general. For example:

$$E_{I,\mathrm{H}} = 13.6\ \mathrm{eV}$$

$$E_{D,\mathrm{H_2}} \approx 4.5\ \mathrm{eV} \quad \text{(UV transition)}$$

$$E_{D,\mathrm{CO}} \approx 11.1\ \mathrm{eV},$$

where $E_I$ and $E_D$ are the ionization and dissociation energies, respectively. We can see that it is much easier to dissociate molecular hydrogen than to ionize atomic hydrogen; in other words, atomic H will survive a harsher radiation field than molecular H. The above numbers thus set the structure of molecular clouds in the interstellar radiation field: a large amount of molecular gas needs to gather together in order to survive via self-shielding, in which a thick enough column of gas exists that, at some distance below the surface of the cloud, all of the energetic photons have already been absorbed. Note that the high dissociation energy of CO is a result of the triple bond between the carbon and oxygen atoms. CO is a very important coolant in molecular clouds.

Measuring States in the ISM

(updated for 2013)

There are two primary observational diagnostics of the thermal, chemical, and ionization states in the ISM:

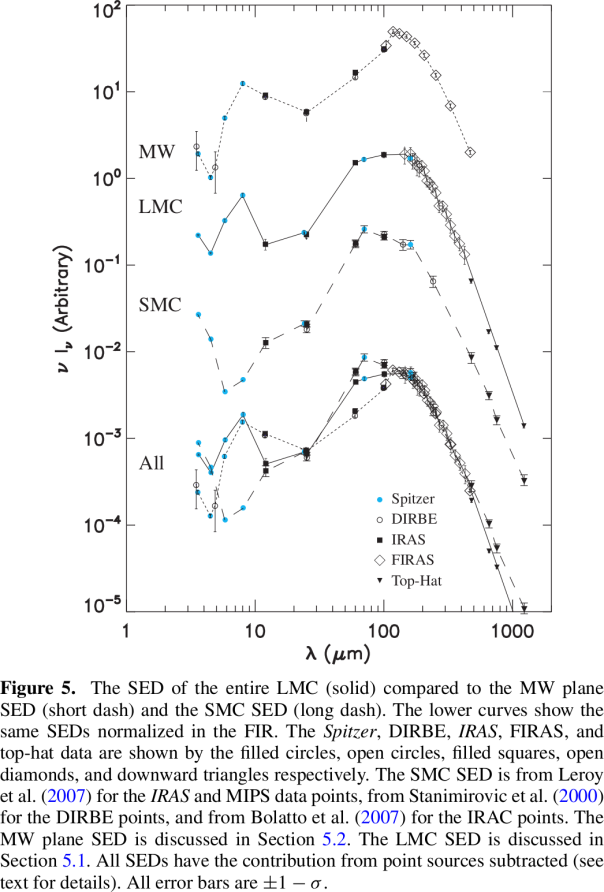

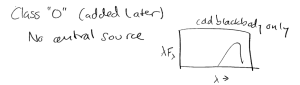

- Spectral Energy Distribution (SED; broadband low-resolution)

- Spectrum (narrowband, high-resolution)

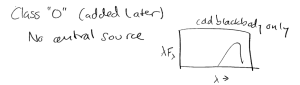

SEDs

Very generally, if a source’s SED is blackbody-like, one can fit a Planck function to the SED and derive the temperature and column density (if one can assume LTE). If an SED is not blackbody-like, the emission is the sum of various processes, including:

- thermal emission (e.g. dust, CMB)

- synchrotron emission (power law spectrum)

- free-free emission (thermal for a thermal electron distribution)

Spectra

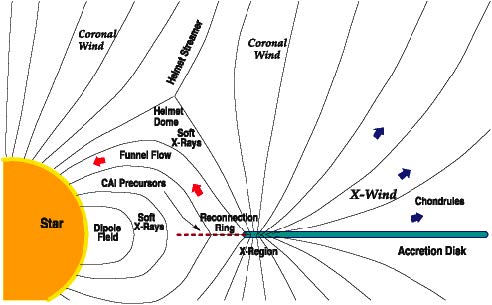

Quantum mechanics combined with chemistry can predict line strengths. Ratios of lines can be used to model “excitation”, i.e. what physical conditions (density, temperature, radiation field, ionization fraction, etc.) lead to the observed distribution of line strengths. Excitation is controlled by

- collisions between particles (LTE often assumed, but not always true)

- photons from the interstellar radiation field, nearby stars, shocks, CMB, chemistry, cosmic rays

- recombination/ionization/dissociation

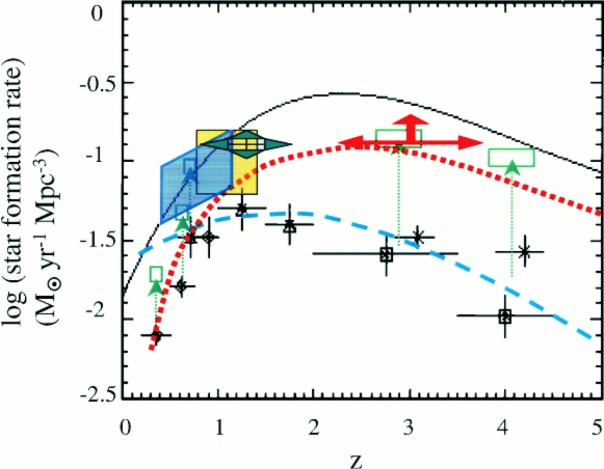

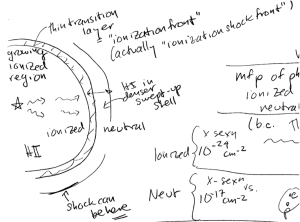

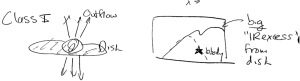

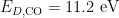

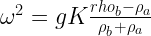

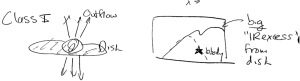

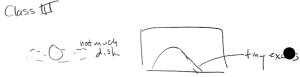

Which of these processes matter where? In class (2011), we drew the following schematic.

A schematic of several structures in the ISM

Key

A: Dense molecular cloud with stars forming within

- $T \sim 10$ to $50$ K, $n > 10^3\ \mathrm{cm^{-3}}$ (measured, e.g., from line ratios)

- gas is mostly molecular (low T, high n, self-shielding from UV photons, few shocks)

- not much photoionization due to high extinction (but could be complicated ionization structure due to patchy extinction)

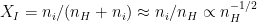

- cosmic rays can penetrate, leading to fractional ionization: $X_I = n_i/(n_H + n_i) \approx n_i/n_H \propto n_H^{-1/2}$, where $n_i$ is the ion density (see Draine 16.5 for details). Measured values for $X_e$ (the electron-to-neutral ratio, which is presumed equal to the ionization fraction) are about $10^{-7}$ to $10^{-6}$.

- possible shocks due to impinging HII region – could raise T, n, ionization, and change chemistry globally

- shocks due to embedded young stars w/ outflows and winds -> local changes in T, n, ionization, chemistry

- time evolution? feedback from stars formed within?

B: Cluster of OB stars (an HII region ionized by their integrated radiation)

- 7000 < T < 10,000 K (from line ratios)

- gas primarily ionized due to photons beyond Lyman limit (E > 13.6 eV) produced by O stars

- elements other than H have different ionization energy, so will ionize more or less easily

- HII regions are often clumpy; this is observed as a deficit in the average value of $n_e$ from continuum radiation over the entire region as compared to the value of $n_e$ derived from line ratios. In other words, certain regions are denser (in ionized gas) than others.

- The above introduces the idea of a filling factor, defined as the ratio of filled volume to total volume (in this case the filled volume is that of ionized gas)

- dust is present in HII regions (as evidenced by observations of scattered light), though the smaller grains may be destroyed

- significant radio emission: free-free (bremsstrahlung), synchrotron, and recombination line (e.g. H76$\alpha$)

- chemistry is highly dependent on n, T, flux, and time

C: Supernova remnant

- gas can be ionized in shocks by collisions (high velocities required to produce high energy collisions, high T)

- e.g. if v > 1000 km/s, $T > 10^6$ K

- atom-electron collisions will ionize H, He; produce x-rays; produce highly ionized heavy elements

- gas can also be excited (e.g. vibrational H2 emission) and dissociated by shocks

D: General diffuse ISM

- UV radiation from the interstellar radiation field produces ionization

- $n_e$ best measured from pulsar dispersion measure (DM), an observable.

- role of magnetic fields depends critically on $X_I$, n (B-fields do not directly affect neutrals, though their effects can be felt through ion-neutral collisions)

Energy Density Comparison

(updated for 2013)

See Draine table 1.5. The primary sources of energy present in the ISM are:

- The CMB ($T = 2.725$ K)

- Thermal IR from dust

- Starlight ($h\nu < 13.6$ eV)

- Thermal kinetic energy ($\frac{3}{2} nkT$)

- Turbulent kinetic energy ($\frac{1}{2}\rho\sigma_v^2$)

- Magnetic fields ($B^2/8\pi$)

- Cosmic rays

All of these terms have energy densities within an order of magnitude of $1\ \mathrm{eV\,cm^{-3}}$. With the exception of the CMB, this is not a coincidence: because of the dynamic nature of the ISM, these processes are coupled together and thus exchange energy with one another.
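Three of these energy densities follow from closed-form expressions and can be compared directly. The sketch below is a rough illustration only: the inputs (a CMB temperature of 2.725 K, a 5 microgauss field, and a thermal pressure of $nT = 3000\ \mathrm{cm^{-3}\,K}$) are assumed representative values, not figures taken from Draine's table.

```python
import math

ERG_PER_EV = 1.602177e-12  # erg per electron volt
A_RAD = 7.5657e-15         # radiation constant, erg cm^-3 K^-4
K_B = 1.380649e-16         # Boltzmann constant, erg/K


def cmb_energy_density(t_cmb):
    """Blackbody radiation energy density a T^4, in eV cm^-3."""
    return A_RAD * t_cmb**4 / ERG_PER_EV


def magnetic_energy_density(b_gauss):
    """Magnetic energy density B^2 / (8 pi), in eV cm^-3."""
    return b_gauss**2 / (8.0 * math.pi) / ERG_PER_EV


def thermal_energy_density(n_cm3, temperature_k):
    """Thermal kinetic energy density (3/2) n k T, in eV cm^-3."""
    return 1.5 * n_cm3 * K_B * temperature_k / ERG_PER_EV


u_cmb = cmb_energy_density(2.725)
u_mag = magnetic_energy_density(5.0e-6)        # assumed B = 5 microgauss
u_thermal = thermal_energy_density(1.0, 3000)  # assumed n T = 3000 cm^-3 K

for name, u in (("CMB", u_cmb), ("magnetic", u_mag), ("thermal", u_thermal)):
    print(f"{name:9s} u ~ {u:.2f} eV cm^-3")
```

All three come out as a few tenths of an eV per cubic centimetre under these assumptions.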

Relevant Velocities in the ISM

(updated for 2013)

Note: it’s handy to remember that 1 km/s ~ 1 pc / Myr.

- Galactic rotation: 18 km/s/kpc (e.g. 180 km/s at 10 kpc)

- Isothermal sound speed: $c_s = \sqrt{kT/(\mu m_H)}$

- For H, this speed is 0.3, 1, and 3 km/s at 10 K, 100 K, and 1000 K, respectively.

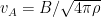

- Alfvén speed: The speed at which magnetic fluctuations propagate. $v_A = B/\sqrt{4\pi\rho}$. Alfvén waves are transverse waves along the direction of the magnetic field.

- Note that $v_A \sim c_s$ if $B^2/8\pi \sim nkT$, which is observed to be true over a large portion of the ISM.

- Interstellar B-fields can be measured using the Zeeman effect. Observed values range from a few $\mu$G in the diffuse ISM to $\sim 1$ mG in dense clouds. For specific conditions:

- $B \sim 1$ mG and $n \sim 10^6\ \mathrm{cm^{-3}}$ give $v_A \approx 2$ km/s in dense gas

- $B \sim 5\ \mu$G and $n \sim 1\ \mathrm{cm^{-3}}$ give $v_A \approx 10$ km/s in diffuse gas

- Compare to the isothermal sound speed, which is 0.3 km/s in dense gas at 20 K.

- Observed velocity dispersion in molecular gas is typically about 1 km/s, and is thus supersonic. This is a signature of the presence of turbulence. (see the summary of Larson’s seminal 1981 paper)
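The Alfvén speed, $B/\sqrt{4\pi\rho}$ in cgs units, can be evaluated for representative conditions. The sketch below is a minimal example: the field strengths and densities are assumed illustrative values, and the mass density is taken as $n\,m_H$ (one hydrogen mass per particle), which ignores helium and molecular weight.

```python
import math

M_H = 1.6735e-24  # hydrogen atom mass, g


def alfven_speed_kms(b_gauss, n_cm3, mass_per_particle=M_H):
    """Alfven speed B / sqrt(4 pi rho) in km/s (cgs inputs)."""
    rho = n_cm3 * mass_per_particle
    return b_gauss / math.sqrt(4.0 * math.pi * rho) / 1.0e5


# Assumed conditions: 1 mG in dense gas, 5 microgauss in diffuse gas.
v_dense = alfven_speed_kms(1.0e-3, 1.0e6)
v_diffuse = alfven_speed_kms(5.0e-6, 1.0)
print(f"dense gas:   v_A ~ {v_dense:.1f} km/s")
print(f"diffuse gas: v_A ~ {v_diffuse:.1f} km/s")
```

Because $v_A \propto B\,n^{-1/2}$, a field that is 200 times stronger in gas that is a million times denser yields a lower Alfvén speed.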

Introductory remarks on Radiative Processes and Equilibrium

(updated for 2013)

The goal of the next several sections is to build an understanding of how photons are produced by, are absorbed by, and interact with the ISM. We consider a system in which one or more constituents are excited under certain physical conditions to produce photons, then the photons pass through other constituents under other conditions, before finally being observed (and thus affected by the limitations and biases of the observational conditions and instruments) on Earth. Local thermodynamic equilibrium is often used to describe the conditions, but this does not always hold. Remember that our overall goal is to turn observations of the ISM into physics, and vice-versa.

The following contribute to an observed Spectral Energy Distribution:

- gas: spontaneous emission, stimulated emission (e.g. masers), absorption, scattering processes involving photons + electrons or bound atoms/molecules

- dust: absorption; scattering (the sum of these two -> extinction); emission (blackbody modified by wavelength-dependent emissivity)

- other: synchrotron, bremsstrahlung, etc.

The processes taking place in our “system” depend sensitively on the specific conditions of the ISM in question, but the following “rules of thumb” are worth remembering:

- Very rarely is a system actually in a true equilibrium state.

- Except in HII regions, transitions in the ISM are usually not electronic.

- The terms Upper Level and Lower Level refer to any two quantum mechanical states of an atom or molecule where $E_{\rm upper} > E_{\rm lower}$. We will use k to index the upper state, and j for the lower state.

- Transitions can be induced by photons, cosmic rays, collisions with atoms and molecules, and interactions with free electrons.

- Levels can refer to electronic, rotational, vibrational, spin, and magnetic states.

- To understand radiative processes in the ISM, we will generally need to know the chemical composition, ambient radiation field, and velocity distribution of each ISM component. We will almost always have to make simplifying assumptions about these conditions.

Thermodynamic Equilibrium

(updated for 2013)

Collisions and radiation generally compete to establish the relative populations of different energy states. Randomized collisional processes push the distribution of energy states to the Boltzmann distribution, $N(E) \propto e^{-E/kT}$. When collisions dominate over competing processes and establish the Boltzmann distribution, we say the ISM is in Thermodynamic Equilibrium.

Often this only holds locally, hence the term Local Thermodynamic Equilibrium or LTE. For example, the fact that we can observe stars implies that energy (via photons) is escaping the system. While this cannot be considered a state of global thermodynamic equilibrium, localized regions in stellar interiors are in near-equilibrium with their surroundings.

But the ISM is not like stars. In stars, most emission, absorption, scattering, and collision processes occur on timescales very short compared with dynamical or evolutionary timescales. Due to the low density of the ISM, interactions are much more rare. This makes it difficult to establish equilibrium. Furthermore, many additional processes disrupt equilibrium (such as energy input from hot stars, cosmic rays, X-ray background, shocks).

As a consequence, in the ISM the level populations in atoms and molecules are not always in their equilibrium distribution. Because of the low density, most photons are created from (rare) collisional processes (except in locations like HII regions where ionization and recombination become dominant).

Spitzer Notation

(updated for 2013)

We will use the notation from Spitzer (1978). See also Draine, Ch. 3. We represent the density of a state j as $n_j(X^{(r)})$, where

- n: particle density

- j: quantum state

- X: element

- (r): ionization state

- For example, $n_1(\mathrm{H}^{(0)})$ is the density of neutral hydrogen in its ground state

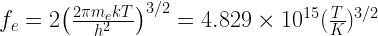

In his book, Spitzer defines something called “Equivalent Thermodynamic Equilibrium” or “ETE”. In ETE, $n_j^*$ gives the “equivalent” density in state j. The true (observed) value is $n_j$. He then defines the ratio of the true density to the ETE density to be

$$b_j = \frac{n_j}{n_j^*}.$$

This quantity approaches 1 when collisions dominate over ionization and recombination. For LTE, $b_j = 1$ for all levels. The level population is then given by the Boltzmann equation:

$$\frac{n_j^*(X^{(r)})}{n_k^*(X^{(r)})} = \frac{g_{rj}}{g_{rk}}\, e^{-(E_{rj} - E_{rk})/kT},$$

where $E_{rj}$ and $g_{rj}$ are the energy and statistical weight (degeneracy) of level j, ionization state r. The exponential term is called the “Boltzmann factor” and determines the relative probability for a state.

The term “Maxwellian” describes the velocity distribution of a 3-D gas. “Maxwell-Boltzmann” is a special case of the Boltzmann distribution for velocities.

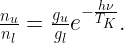

Using our definition of b and dropping the “r” designation,

$$\frac{n_k}{n_j} = \frac{b_k}{b_j}\,\frac{g_k}{g_j}\, e^{-h\nu_{jk}/kT},$$

where $\nu_{jk}$ is the frequency of the radiative transition from k to j. We will use the convention that $E_k > E_j$, such that $E_{jk} = h\nu_{jk} > 0$.

To find the fraction of atoms of species $X^{(r)}$ excited to level j, define:

$$n^*(X^{(r)}) = \sum_m n_m^*(X^{(r)})$$

as the particle density of $X^{(r)}$ in all states. Then

$$\frac{n_j^*(X^{(r)})}{n^*(X^{(r)})} = \frac{g_{rj}\, e^{-E_{rj}/kT}}{\sum_m g_{rm}\, e^{-E_{rm}/kT}}.$$

Define $f_r$, the “partition function” for species $X^{(r)}$, to be the denominator of the RHS of the above equation. Then we can write, more simply:

$$\frac{n_j^*(X^{(r)})}{n^*(X^{(r)})} = \frac{g_{rj}}{f_r}\, e^{-E_{rj}/kT}$$

to be the fraction of particles that are in state j. By computing this for all j we now know the distribution of level populations for ETE.
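The level fractions can be computed directly once energies and statistical weights are specified. The sketch below does this for a toy hydrogen atom with Bohr energies $13.6\,(1 - 1/n^2)$ eV and weights $2n^2$. Truncating the partition function at n = 5 is an assumption made only to keep the sum finite, and the function name `level_fractions` is hypothetical.

```python
import math

K_EV = 8.617333e-5  # Boltzmann constant in eV/K


def level_fractions(energies_ev, weights, temperature_k):
    """Fraction of particles in each level: g_j exp(-E_j / kT) / partition sum."""
    kt = K_EV * temperature_k
    terms = [g * math.exp(-e / kt) for e, g in zip(energies_ev, weights)]
    partition = sum(terms)  # the partition function for this level list
    return [term / partition for term in terms]


# Toy hydrogen atom: levels n = 1..5 (truncation is an assumption).
levels = range(1, 6)
energies = [13.6 * (1.0 - 1.0 / n**2) for n in levels]  # eV above ground
weights = [2 * n**2 for n in levels]

fractions = level_fractions(energies, weights, 1.0e4)
for n, frac in zip(levels, fractions):
    print(f"n = {n}: fraction = {frac:.3e}")
```

Even at $10^4$ K almost all of the atoms sit in the ground state, which is why approximating a partition function by its first term is often adequate.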

The Saha Equation

(updated for 2013)

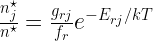

How do we deal with the distribution over different states of ionization r? In thermodynamic equilibrium, the Saha equation gives:

$$\frac{n^*(X^{(r+1)})\, n_e}{n^*(X^{(r)})} = \frac{f_{r+1}\, f_e}{f_r},$$

where $f_r$ and $f_{r+1}$ are the partition functions as discussed in the previous section. The partition function for electrons is given by

$$f_e = 2\left(\frac{2\pi m_e kT}{h^2}\right)^{3/2} \approx 4.83\times10^{15} \left(\frac{T}{\mathrm{K}}\right)^{3/2}\ \mathrm{cm^{-3}}.$$

For a derivation of this, see pages 103-104 of this handout from Bowers and Deeming.

If $f_r$ and $f_{r+1}$ are approximated by the first terms in their sums (i.e. if the ground state dominates their level populations), then

$$\frac{n^*(X^{(r+1)})\, n_e}{n^*(X^{(r)})} = \frac{g_{r+1,1}}{g_{r,1}}\, f_e\, e^{-\Phi_r/kT},$$

where $\Phi_r$ is the energy required to ionize $X^{(r)}$ from the ground (j = 1) level. Ultimately, this is just a function of $n_e$ and $T$. This assumes that the only relevant ionization process is via thermal collision (i.e. shocks, strong ionizing sources, etc. are ignored).
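For pure hydrogen the ground-state form of the Saha equation reduces to a quadratic in the ionized fraction x, since $n_e = n_p = x\,n$ and the neutral density is $(1 - x)\,n$. The sketch below solves that quadratic. It is a minimal illustration assuming collisional (thermal) ionization only, statistical weights of 1 for the proton and 2 for the hydrogen ground state, and hypothetical function names.

```python
import math

K_B = 1.380649e-16      # Boltzmann constant, erg/K
H_PLANCK = 6.62607e-27  # Planck constant, erg s
M_E = 9.10938e-28       # electron mass, g
ERG_PER_EV = 1.602177e-12


def electron_partition_function(temperature_k):
    """f_e = 2 (2 pi m_e k T / h^2)^(3/2), in cm^-3."""
    return 2.0 * (2.0 * math.pi * M_E * K_B * temperature_k / H_PLANCK**2) ** 1.5


def hydrogen_ionized_fraction(temperature_k, n_total_cm3):
    """Ionized fraction x of pure hydrogen from the ground-state Saha equation.

    Solves x^2 / (1 - x) = s with s = (g_ion / g_neutral) f_e exp(-Phi/kT) / n.
    """
    boltzmann = math.exp(-13.6 * ERG_PER_EV / (K_B * temperature_k))
    s = 0.5 * electron_partition_function(temperature_k) * boltzmann / n_total_cm3
    if s == 0.0:
        return 0.0  # exponential underflow: effectively neutral
    # Algebraically equal to (-s + sqrt(s^2 + 4 s)) / 2, but stable for large s.
    return 2.0 / (1.0 + math.sqrt(1.0 + 4.0 / s))


x_cool = hydrogen_ionized_fraction(3000.0, 1.0)
x_hot = hydrogen_ionized_fraction(1.0e4, 1.0)
print(f"T = 3000 K:  x = {x_cool:.3f}")
print(f"T = 10000 K: x = {x_hot:.6f}")
```

The steep dependence on temperature comes from the Boltzmann factor. Note that real ISM gas is usually far from this equilibrium, so the result is a reference point rather than a prediction.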

Important Properties of Local Thermodynamic Equilibrium

(updated for 2013)

For actual local thermodynamic equilibrium (not ETE), the following are important to keep in mind:

- Detailed balance: transition rate from j to k = rate from k to j (i.e. no net change in particle distribution)

- LTE is equivalent to ETE when $b_j = 1$ or $b_j/b_k = 1$

- LTE is only an approximation, good under specific conditions.

- Radiation intensity produced is not blackbody illumination as you’d want for true thermodynamic equilibrium.

- Radiation is usually much weaker than the Planck function, which means not all levels are populated.

- LTE assumption does not mean the Saha equation is applicable since radiative processes (not collisions) dominate in many ISM cases where LTE is applicable.

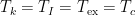

Definitions of Temperature

(updated for 2013)

The term “temperature” describes several different quantities in the ISM, and in observational astronomy. Only under idealized conditions (i.e. thermodynamic equilibrium, the Rayleigh Jeans regime, etc.) are (some of) these temperatures equivalent. For example, in stellar interiors, where the plasma is very well-coupled, a single “temperature” defines each of the following: the velocity distribution, the ionization distribution, the spectrum, and the level populations. In the ISM each of these can be characterized by a different “temperature!”

Brightness Temperature

$T_B$ is the temperature of a blackbody that reproduces a given flux density at a specific frequency, such that

$$I_\nu = B_\nu(T_B) = \frac{2h\nu^3}{c^2}\,\frac{1}{e^{h\nu/kT_B} - 1}.$$

Note: units for $B_\nu$ are $\mathrm{erg\,cm^{-2}\,s^{-1}\,Hz^{-1}\,sr^{-1}}$.

This is a fundamental concept in radio astronomy. Note that the above definition assumes that the index of refraction in the medium is exactly 1.
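Inverting the Planck function gives the brightness temperature for any measured specific intensity. The sketch below is a minimal illustration in cgs units. The test frequency of 115 GHz (close to the CO J=1-0 line) and the 10 K source temperature are assumptions chosen for the example, and the Rayleigh-Jeans function is included only for comparison.

```python
import math

H_PLANCK = 6.62607e-27  # Planck constant, erg s
K_B = 1.380649e-16      # Boltzmann constant, erg/K
C_LIGHT = 2.99792458e10 # speed of light, cm/s


def planck_intensity(nu_hz, temperature_k):
    """Planck function B_nu(T) in erg cm^-2 s^-1 Hz^-1 sr^-1."""
    x = H_PLANCK * nu_hz / (K_B * temperature_k)
    return 2.0 * H_PLANCK * nu_hz**3 / C_LIGHT**2 / math.expm1(x)


def brightness_temperature(nu_hz, intensity):
    """Temperature of the blackbody whose B_nu equals the given intensity."""
    ratio = 2.0 * H_PLANCK * nu_hz**3 / (C_LIGHT**2 * intensity)
    return H_PLANCK * nu_hz / K_B / math.log1p(ratio)


def rayleigh_jeans_temperature(nu_hz, intensity):
    """Low-frequency approximation: T = c^2 I_nu / (2 k nu^2)."""
    return C_LIGHT**2 * intensity / (2.0 * K_B * nu_hz**2)


nu = 115.0e9                       # assumed frequency, Hz
i_nu = planck_intensity(nu, 10.0)  # intensity of an assumed 10 K blackbody
t_b = brightness_temperature(nu, i_nu)
t_rj = rayleigh_jeans_temperature(nu, i_nu)
print(f"T_B = {t_b:.3f} K, Rayleigh-Jeans T = {t_rj:.3f} K")
```

At this frequency and temperature $h\nu/kT$ is not small, so the Rayleigh-Jeans value falls noticeably below the true brightness temperature.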

Effective Temperature

$T_{\rm eff}$ (also called $T_{\rm rad}$, the radiation temperature) is defined by

$$\int_\nu F_\nu \, d\nu = \sigma T_{\rm eff}^4,$$

which is the frequency-integrated flux of a blackbody of temperature $T_{\rm eff}$. $\sigma$ is the Stefan-Boltzmann constant.

Color Temperature

$T_c$ is defined by the slope (in log-log space) of an SED. Thus $T_c$ is the temperature of a blackbody that has the same ratio of fluxes at two wavelengths as a given measurement. Note that $T_c = T_B = T_{\rm eff}$ for a perfect blackbody.

Kinetic Temperature

$T_k$ is the temperature that a particle of gas would have if its Maxwell-Boltzmann velocity distribution reproduced the width of a given line profile. It characterizes the random velocity of particles. For a purely thermal gas, the line profile is given by

$$I(\nu) = I_0 \exp\left[-\frac{(\nu - \nu_{jk})^2}{2\sigma_\nu^2}\right],$$

where $\sigma_\nu = \frac{\nu_{jk}}{c}\sqrt{\frac{kT_k}{m}}$ in frequency units, or $\sigma_v = \sqrt{\frac{kT_k}{m}}$ in velocity units, with $m$ the mass of the emitting particle.

In the “hot” ISM $T_k$ is characteristic, but when $\Delta v_{\rm non-thermal} > \Delta v_{\rm thermal}$ (where $\Delta v$ are the Doppler full widths at half-maxima [FWHM]) then $T_k$ does not represent the random velocity distribution. Examples include regions dominated by turbulence.

$T_k$ can be different for neutrals, ions, and electrons because each can have a different Maxwellian distribution. For electrons, $T_k = T_e$, the electron temperature.

Ionization Temperature

$T_I$ is the temperature which, when plugged into the Saha equation, gives the observed ratio of ionization states.

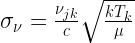

Excitation Temperature

$T_{\rm ex}$ is the temperature which, when plugged into the Boltzmann distribution, gives the observed ratio of two energy states. Thus it is defined by

$$\frac{n_k}{n_j} = \frac{g_k}{g_j}\, e^{-h\nu_{jk}/kT_{\rm ex}}.$$

Note that in stellar interiors, $T_k = T_I = T_{\rm ex} = T_c$. In this room, $T_k = T_I = T_{\rm ex} \sim 300$ K, but $T_c \sim 6000$ K.
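As a minimal sketch of this definition, the following Python function inverts the Boltzmann ratio for $T_{\rm ex}$. It assumes the convention $n_k/n_j = (g_k/g_j)\exp(-h\nu_{jk}/kT_{\rm ex})$ with $k$ the upper level; the function name, variable names, and the 21-cm test values are illustrative choices, not taken from the notes.

```python
import math

H = 6.62607015e-27      # Planck constant [erg s]
K_B = 1.380649e-16      # Boltzmann constant [erg/K]

def excitation_temperature(n_upper, n_lower, g_upper, g_lower, nu):
    """T_ex [K] that reproduces the observed level-population ratio."""
    ratio = (n_upper / n_lower) * (g_lower / g_upper)
    return -H * nu / (K_B * math.log(ratio))

# Round trip for a two-level system (assumed values: 21-cm line, g = 3 and 1).
nu_21cm = 1.420405751e9                  # line frequency [Hz]
T_true = 100.0                           # input temperature [K]
n_ratio = 3.0 * math.exp(-H * nu_21cm / (K_B * T_true))
T_recovered = excitation_temperature(n_ratio, 1.0, 3.0, 1.0, nu_21cm)
print(T_recovered)
```

Note that an inverted population (more particles per sublevel in the upper state than in the lower one) gives a negative $T_{\rm ex}$ under this definition, which is the regime relevant for masers.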

Spin Temperature

$T_s$ is a special case of $T_{\rm ex}$ for spin-flip transitions. We’ll return to this when we discuss the important 21-cm line of neutral hydrogen.

Bolometric temperature

$T_{\rm bol}$ is the temperature of a blackbody having the same mean frequency as the observed continuum spectrum. For a blackbody, $T_{\rm bol} = T_{\rm eff}$. This is a useful quantity for young stellar objects (YSOs), which are often heavily obscured in the optical and have infrared excesses due to the presence of a circumstellar disk.

Antenna temperature

$T_A$ is a directly measured quantity (commonly used in radio astronomy) that incorporates radiative transfer and possible losses between the source emitting the radiation and the detector. In the simplest case,

$$T_A = \eta\, T_B \left(1 - e^{-\tau}\right),$$

where $\eta$ is the telescope efficiency (a numerical factor from 0 to 1) and $\tau$ is the optical depth.

Excitation Processes: Collisions

(updated for 2013)

Collisional coupling means that the gas can be treated in the fluid approximation, i.e. we can treat the system on a macrophysical level.

Collisions are of key importance in the ISM:

- cause most of the excitation

- can cause recombinations (electron + ion)

- lead to chemical reactions

Three types of collisions

1. Coulomb force-dominated ($r^{-1}$ potential): electron-ion, electron-electron, ion-ion

2. Ion-neutral: induced dipole in neutral atom leads to $r^{-4}$ potential; e.g. electron-neutral scattering

3. Neutral-neutral: van der Waals forces -> $r^{-6}$ potential; very low cross-section

We will discuss (3) and (2) below; for ion-electron and ion-ion collisions, see Draine Ch. 2.

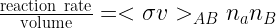

In general, we will parametrize the interaction rate between two bodies A and B as follows:

$$\frac{\text{reaction rate}}{\text{volume}} = \langle \sigma v \rangle_{AB}\, n_A n_B$$

In this equation, $\langle \sigma v \rangle_{AB}$ is the collision rate coefficient in $\mathrm{cm^3\;s^{-1}}$:

$$\langle \sigma v \rangle_{AB} = \int_0^\infty \sigma_{AB}(v)\, v\, f_v\, dv,$$

where $\sigma_{AB}(v)$ is the velocity-dependent cross section and $f_v\, dv$ is the particle velocity distribution, i.e. the probability that the relative speed between A and B is v. For the Maxwellian velocity distribution,

$$f_v\, dv = 4\pi \left(\frac{\mu}{2\pi kT}\right)^{3/2} e^{-\mu v^2/2kT}\, v^2\, dv,$$

where $\mu = m_A m_B/(m_A + m_B)$ is the reduced mass. The center of mass energy is $E = \frac{1}{2}\mu v^2$, and the distribution can just as well be written in terms of the energy distribution of particles, $f_E\, dE$. Since $f_E\, dE = f_v\, dv$, we can rewrite the collision rate coefficient in terms of energy as

$$\langle \sigma v \rangle_{AB} = \left(\frac{8kT}{\pi\mu}\right)^{1/2} \int_0^\infty \sigma_{AB}(E)\, \frac{E}{kT}\, e^{-E/kT}\, \frac{dE}{kT}.$$

These collision coefficients can occasionally be calculated analytically (via classical or quantum mechanics), and can in other situations be measured in the lab. The collision coefficients often depend on temperature. For practical purposes, many databases tabulate collision rates for different molecules and temperatures (e.g., the LAMBDA database).
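To make the energy form of the rate coefficient concrete, here is a small Python sketch that evaluates $\langle\sigma v\rangle = (8kT/\pi\mu)^{1/2}\int \sigma(E)\,(E/kT)\,e^{-E/kT}\,dE/kT$ with a trapezoid rule. The constant cross section, temperature, and reduced mass are assumed illustrative values; for a constant $\sigma$ the integral is 1, so the result should reduce to $\sigma$ times the mean relative speed.

```python
import math

K_B = 1.380649e-16   # Boltzmann constant [erg/K]
M_H = 1.6735e-24     # hydrogen atom mass [g]

def rate_coefficient(sigma_of_E, T, mu, n_steps=20000, x_max=50.0):
    """<sigma v> [cm^3/s] for a Maxwellian, integrating over x = E/kT."""
    kT = K_B * T
    dx = x_max / n_steps
    total = 0.0
    for i in range(n_steps + 1):
        x = i * dx
        weight = 0.5 if i in (0, n_steps) else 1.0   # trapezoid end points
        total += weight * sigma_of_E(x * kT) * x * math.exp(-x)
    return math.sqrt(8.0 * kT / (math.pi * mu)) * total * dx

# Assumed example: hard-sphere cross section, H-H collisions at 100 K.
sigma0 = 1e-15                     # [cm^2]
T = 100.0                          # [K]
mu = 0.5 * M_H                     # reduced mass of two H atoms [g]
k_numeric = rate_coefficient(lambda E: sigma0, T, mu)
k_analytic = sigma0 * math.sqrt(8.0 * K_B * T / (math.pi * mu))
print(k_numeric, k_analytic)
```

Passing a different `sigma_of_E` (for example an interpolation of tabulated cross sections) would give a temperature-dependent coefficient of the kind the databases tabulate.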

For more details, see Draine, Chapter 2. In particular, he discusses 3-body collisions relevant at high densities.

Neutral-Neutral Interactions

(updated for 2013)

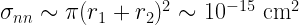

Short range forces involving “neutral” particles (neutral-ion, neutral-neutral) are inherently quantum-mechanical. Neutral-neutral interactions are very weak until electron clouds overlap ($\sim 10^{-8}$ cm). We can therefore treat these particles as hard spheres. The collisional cross section for two species is a circle of radius r1 + r2, since that is the closest two particles can get without touching:

$$\sigma_{nn} \sim \pi (r_1 + r_2)^2 \sim 10^{-15}\ \mathrm{cm^2}$$

What does that collision rate imply? Consider the mean free path:

$$\lambda_{\rm mfp} = (n_n \sigma_{nn})^{-1} \approx \frac{10^{15}}{n_H}\ \mathrm{cm}$$

This is about 100 AU in typical ISM conditions ($n_H = 1\ \mathrm{cm^{-3}}$).

In gas at temperature T, the mean particle velocity is given by the 3-d kinetic energy: $\frac{1}{2} m_n v^2 = \frac{3}{2} kT$, or

$$v = \sqrt{\frac{3kT}{m_n}},$$

where $m_n$ is the mass of the neutral particle. The mean free path and velocity allow us to define a collision timescale:

$$\tau_{nn} \sim \frac{\lambda_{\rm mfp}}{v}.$$

- For (n,T) = ($1\ \mathrm{cm^{-3}}$, 80 K), the collision time is 500 years

- For (n,T) = ($10^4\ \mathrm{cm^{-3}}$, 10 K), the collision time is 1.7 months

- For (n,T) = ($1\ \mathrm{cm^{-3}}$, $10^4$ K), the collision time is 45 years

So we see that density matters much more than temperature in determining the frequency of neutral-neutral collisions.
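The scaling behind this conclusion, $\tau_{nn} \propto 1/(n\sqrt{T})$, can be checked with a short Python sketch. The cross section of $10^{-15}\ \mathrm{cm^2}$, the use of the hydrogen atom mass, and the rms speed $\sqrt{3kT/m}$ are assumptions made here; absolute values depend on those choices and so should be read as order-of-magnitude estimates that may differ from the quoted times by a factor of a few, while the ratios between the three cases do not depend on them.

```python
import math

K_B = 1.380649e-16   # Boltzmann constant [erg/K]
M_H = 1.6735e-24     # hydrogen atom mass [g]
YEAR = 3.156e7       # seconds per year
SIGMA_NN = 1e-15     # assumed hard-sphere cross section [cm^2]

def collision_time_yr(n, T, m=M_H, sigma=SIGMA_NN):
    """Neutral-neutral collision time [yr]: mean free path over rms speed."""
    mean_free_path = 1.0 / (n * sigma)          # [cm]
    v_rms = math.sqrt(3.0 * K_B * T / m)        # [cm/s]
    return mean_free_path / v_rms / YEAR

cases = [(1.0, 80.0), (1e4, 10.0), (1.0, 1e4)]  # (n [cm^-3], T [K])
times = [collision_time_yr(n, T) for n, T in cases]
for (n, T), t in zip(cases, times):
    print(f"n = {n:g} cm^-3, T = {T:g} K: tau ~ {t:.3g} yr")
```

Changing the density by four orders of magnitude changes the collision time by the same factor, whereas changing the temperature by two orders of magnitude changes it only by a factor of ten.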

Ion-Neutral Reactions

(updated for 2013)

In Ion-Neutral reactions, the neutral atom is polarized by the electric field of the ion, so that the interaction potential is

$$U(r) \approx \vec{E} \cdot \vec{p} = \frac{Ze}{r^2}\left(\alpha \frac{Ze}{r^2}\right) = \frac{\alpha Z^2 e^2}{r^4},$$

where $\vec{E}$ is the electric field due to the charged particle, $\vec{p}$ is the induced dipole moment in the neutral particle (determined by quantum mechanics), and $\alpha$ is the polarizability, which defines $\vec{p} = \alpha \vec{E}$ for a neutral atom in a uniform static electric field. See Draine, section 2.4 for more details.

This interaction can take strong or weak forms. We distinguish between the two cases by considering b, the impact parameter. Recall that the reduced mass of a 2-body system is $\mu' = m_1 m_2/(m_1 + m_2)$. In the weak regime, the interaction energy is much smaller than the kinetic energy of the reduced mass:

$$\frac{\alpha Z^2 e^2}{b^4} \ll \frac{\mu' v^2}{2}.$$

In the strong regime, the opposite holds:

$$\frac{\alpha Z^2 e^2}{b^4} \gg \frac{\mu' v^2}{2}.$$

The spatial scale which separates these two regimes corresponds to $b_{\rm crit}$, the critical impact parameter. Setting the two sides equal, we see that

$$b_{\rm crit} = \left(\frac{2\alpha Z^2 e^2}{\mu' v^2}\right)^{1/4}$$

The effective cross section for ion-neutral interactions is

$$\sigma_{ni} \approx \pi b_{\rm crit}^2 = \pi Z e \left(\frac{2\alpha}{\mu'}\right)^{1/2} \frac{1}{v}$$

Deriving an interaction rate is trickier than for neutral-neutral collisions because $n_i \neq n_n$ in general. So, let’s leave out an explicit n and calculate a rate coefficient k instead, in $\mathrm{cm^3\;s^{-1}}$.

$$k = \langle \sigma_{ni} v \rangle$$

(although really $\sigma_{ni} \propto 1/v$, so k is largely independent of v). Combining with the equation above, we get the ion-neutral scattering rate coefficient

$$k = \pi Z e \left(\frac{2\alpha}{\mu'}\right)^{1/2}$$

As an example, for $\mathrm{C^+ - H}$ interactions we get $k \approx 2 \times 10^{-9}\ \mathrm{cm^3\;s^{-1}}$. This is about the rate for most ion-neutral exothermic reactions. This gives us

$$\tau = \frac{1}{n k}.$$

So, if $n = 1\ \mathrm{cm^{-3}}$, the average time $\tau$ between collisions is 16 years. Recall that, for neutral-neutral collisions in the diffuse ISM, we had $\tau \sim 500$ years. Ion-neutral collisions are much more frequent in most parts of the ISM due to the larger interaction cross section.
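The numbers above can be reproduced with a short Python sketch. It evaluates both the order-of-magnitude estimate $k = \pi Z e\,(2\alpha/\mu')^{1/2}$ from the critical impact parameter argument and the standard Langevin rate $k = 2\pi Z e\,(\alpha/\mu')^{1/2}$, which is larger by a factor $\sqrt{2}$. The polarizability of atomic hydrogen ($4.5\,a_0^3$) and a carbon mass of $12\,m_H$ are the assumed inputs; the function names are illustrative.

```python
import math

E_ESU = 4.8032e-10   # elementary charge [esu]
A0 = 5.2918e-9       # Bohr radius [cm]
M_H = 1.6735e-24     # hydrogen atom mass [g]
YEAR = 3.156e7       # seconds per year

def k_estimate(alpha, mu, Z=1):
    """Order-of-magnitude rate coefficient pi Z e sqrt(2 alpha / mu) [cm^3/s]."""
    return math.pi * Z * E_ESU * math.sqrt(2.0 * alpha / mu)

def k_langevin(alpha, mu, Z=1):
    """Langevin rate coefficient 2 pi Z e sqrt(alpha / mu) [cm^3/s]."""
    return 2.0 * math.pi * Z * E_ESU * math.sqrt(alpha / mu)

# Assumed inputs for C+ colliding with H.
alpha_H = 4.5 * A0**3                  # polarizability of H [cm^3]
mu_CH = (12.0 * 1.0) / 13.0 * M_H      # reduced mass [g]

k_est = k_estimate(alpha_H, mu_CH)
k_lan = k_langevin(alpha_H, mu_CH)
tau_yr = 1.0 / (k_lan * 1.0) / YEAR    # collision time for n = 1 cm^-3
print(k_est, k_lan, tau_yr)
```

With these inputs the Langevin form gives roughly $2 \times 10^{-9}\ \mathrm{cm^3\,s^{-1}}$ and a collision time of about 16 years at unit density, while the simpler estimate is lower by $\sqrt{2}$.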

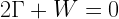

The Virial Theorem

(Transcribed by Bence Beky). See also these slides from lecture

See Draine pp 395-396 and appendix J for more details.

The Virial Theorem provides insight about how a volume of gas subject to many forces will evolve. Let's start with virial equilibrium. For a surface S,

$$0 = \frac{1}{2}\frac{D^2 I}{Dt^2} = 2\mathcal{T} + 3\Pi + \mathcal{M} + W + \frac{1}{4\pi}\int_S (\vec{r}\cdot\vec{B})\,\vec{B}\cdot d\vec{S} - \int_S \left(p + \frac{B^2}{8\pi}\right)\vec{r}\cdot d\vec{S},$$

see Spitzer pp. 217–218. Here I is the moment of inertia:

$$I = \int \varrho r^2\, dV,$$

$\mathcal{T}$ is the bulk kinetic energy of the fluid (macroscopic kinetic energy):

$$\mathcal{T} = \frac{1}{2}\int \varrho v^2\, dV,$$

$\Pi$ is $\frac{2}{3}$ of the random kinetic energy of thermal particles (molecular motion), or $\frac{1}{3}$ of random kinetic energy of relativistic particles (microscopic kinetic energy):

$$\Pi = \int p\, dV,$$

$\mathcal{M}$ is the magnetic energy within S:

$$\mathcal{M} = \frac{1}{8\pi}\int B^2\, dV,$$

and W is the total gravitational energy of the system if masses outside S don’t contribute to the potential:

$$W = -\int \varrho\, \vec{r}\cdot\nabla\Phi\, dV.$$

Among all these terms, the most used ones are $\mathcal{T}$, $\mathcal{M}$ and $W$. But most often the equation is just quoted as $2\mathcal{T} + W = 0$. Note that the virial theorem always holds; inapplicability is only a problem when important terms are omitted.

This kind of simple analysis is often used to determine how bound a system is, and predict its future, e.g. collapse, expansion or evaporation. Specific examples will show up later in the course, including instability analyses.

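The virial theorem as Chandrasekhar and Fermi formulated it in 1953 is the following:

$$\frac{1}{2}\frac{d^2 I}{dt^2} = 2T + 3(\gamma - 1)\,U + \mathfrak{M} + \Omega$$

(with $T$ the kinetic energy of mass motions, $U$ the internal heat energy, $\mathfrak{M}$ the magnetic energy, $\Omega$ the gravitational potential energy, and $\gamma$ the ratio of specific heats).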

This uses a different notation but expresses the same idea, which is very useful in terms of the ISM.

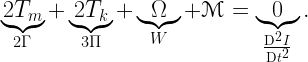

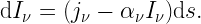

Radiative Transfer

The specific intensity of a radiation field is defined as the energy rate density with respect to frequency, cross sectional area, solid angle and time:

![[\mathrm dI_\nu] = 1 \;\mathrm{erg} \; \mathrm {Hz}^{-1} \; \mathrm {cm}^{-2} \; \mathrm {sr}^{-1} \; \mathrm s ^{-1}](https://s0.wp.com/latex.php?latex=%5B%5Cmathrm+dI_%5Cnu%5D+%3D+1+%5C%3B%5Cmathrm%7Berg%7D+%5C%3B+%5Cmathrm+%7BHz%7D%5E%7B-1%7D+%5C%3B+%5Cmathrm+%7Bcm%7D%5E%7B-2%7D+%5C%3B+%5Cmathrm+%7Bsr%7D%5E%7B-1%7D+%5C%3B+%5Cmathrm+s+%5E%7B-1%7D+&bg=FFFFFF&fg=000000&s=2&c=20201002)

in cgs units. It is important to note that specific intensity does not change during the propagation of a ray, no matter what its geometry is, unless there is extinction or emission in the path.

Specific intensity is a function of position, frequency, direction and time. Integrating over all directions, we get the specific flux density, which is a function of position, frequency and time:

$$F_\nu = \int I_\nu \cos\theta\; \mathrm{d}\Omega$$

![[F_\nu] = 1 \;\mathrm{erg} \; \mathrm {Hz}^{-1} \; \mathrm {cm}^{-2} \; \mathrm s ^{-1}](https://s0.wp.com/latex.php?latex=%5BF_%5Cnu%5D+%3D+1+%5C%3B%5Cmathrm%7Berg%7D+%5C%3B+%5Cmathrm+%7BHz%7D%5E%7B-1%7D+%5C%3B+%5Cmathrm+%7Bcm%7D%5E%7B-2%7D+%5C%3B+%5Cmathrm+s+%5E%7B-1%7D&bg=FFFFFF&fg=000000&s=2&c=20201002)

where $\theta$, the angle between the ray and the normal to the collecting area, is usually assumed to be zero.

A conventional unit of specific flux density, especially in radio astronomy, is the jansky, named after the American radio astronomer Karl Guthe Jansky:

$$1\ \mathrm{Jy} = 10^{-23}\ \mathrm{erg\;s^{-1}\;cm^{-2}\;Hz^{-1}}$$

The specific energy density of a radiation field is

$$u_\nu = \frac{1}{c}\int I_\nu\; \mathrm{d}\Omega$$

![[u_\nu] = 1 \;\mathrm{erg} \; \mathrm {Hz}^{-1} \; \mathrm {cm}^{-3}](https://s0.wp.com/latex.php?latex=%5Bu_%5Cnu%5D+%3D+1+%5C%3B%5Cmathrm%7Berg%7D+%5C%3B+%5Cmathrm+%7BHz%7D%5E%7B-1%7D+%5C%3B+%5Cmathrm+%7Bcm%7D%5E%7B-3%7D&bg=FFFFFF&fg=000000&s=2&c=20201002)

The mean specific intensity is the specific intensity at a given position and time averaged over all directions:

$$J_\nu = \frac{1}{4\pi}\int I_\nu\; \mathrm{d}\Omega$$

![[J_\nu] = 1 \;\mathrm{erg} \; \mathrm {Hz}^{-1} \; \mathrm {cm}^{-2} \; \mathrm {s}^{-1}](https://s0.wp.com/latex.php?latex=%5BJ_%5Cnu%5D+%3D+1+%5C%3B%5Cmathrm%7Berg%7D+%5C%3B+%5Cmathrm+%7BHz%7D%5E%7B-1%7D+%5C%3B+%5Cmathrm+%7Bcm%7D%5E%7B-2%7D+%5C%3B+%5Cmathrm+%7Bs%7D%5E%7B-1%7D&bg=FFFFFF&fg=000000&s=2&c=20201002)

The above “specific” quantities all have their frequency integrated counterparts: intensity, flux density, energy density and mean intensity.

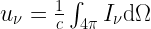

The emission and absorption coefficients $j_\nu$ and $\alpha_\nu$ are defined as the coefficients in the differential equations governing the change of intensity in a ray traversing some medium:

$$\mathrm{d}I_\nu = j_\nu\, \mathrm{d}s, \qquad \mathrm{d}I_\nu = -\alpha_\nu I_\nu\, \mathrm{d}s$$

The emissivity $\epsilon_\nu$ and opacity $\kappa_\nu$ are defined by

$$j_\nu = \frac{\epsilon_\nu \varrho}{4\pi}, \qquad \alpha_\nu = \kappa_\nu \varrho,$$

where $\varrho$ is the mass density. To find the integral equation determining the specific intensity as a function of path, we define the source function $S_\nu$ and the differential optical depth $\mathrm{d}\tau_\nu$ by

$$S_\nu = \frac{j_\nu}{\alpha_\nu}; \qquad \mathrm{d}\tau_\nu = \alpha_\nu\, \mathrm{d}s$$

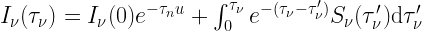

It is left as an exercise to the reader to show that these lead to the Radiative Transfer Equation

$$\frac{\mathrm{d}I_\nu}{\mathrm{d}\tau_\nu} = S_\nu - I_\nu$$

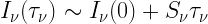

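If the source function is constant in a medium, then $I_\nu = I_\nu(0)\,e^{-\tau_\nu} + S_\nu\left(1 - e^{-\tau_\nu}\right)$, and the two limiting cases are

- optically thin ($\tau_\nu \ll 1$): $I_\nu \approx I_\nu(0)\,(1 - \tau_\nu) + S_\nu \tau_\nu$

- optically thick ($\tau_\nu \gg 1$): $I_\nu \approx S_\nu$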
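The two limits can be checked numerically with a minimal Python sketch of the constant-source-function solution, $I_\nu = I_\nu(0)\,e^{-\tau_\nu} + S_\nu(1 - e^{-\tau_\nu})$. The function name and the input values are arbitrary illustrative choices in arbitrary intensity units.

```python
import math

def emergent_intensity(I0, S, tau):
    """Solution of dI/dtau = S - I for a constant source function S."""
    # -expm1(-tau) equals 1 - exp(-tau) and stays accurate for small tau.
    return I0 * math.exp(-tau) + S * (-math.expm1(-tau))

I0, S = 1.0, 5.0                       # background intensity and source function

tau_thin = 1e-3
thin_exact = emergent_intensity(I0, S, tau_thin)
thin_approx = I0 * (1.0 - tau_thin) + S * tau_thin   # optically thin limit

tau_thick = 50.0
thick_exact = emergent_intensity(I0, S, tau_thick)   # should approach S

print(thin_exact, thin_approx, thick_exact)
```

In the thin case the medium adds (or removes) a contribution proportional to $\tau_\nu$; in the thick case all memory of the background is lost and the emergent intensity equals the source function.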

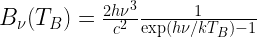

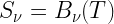

The Planck function, named after the German physicist Max Karl Ernst Ludwig Planck, is

$$B_\nu(T) = \frac{2h\nu^3}{c^2}\,\frac{1}{e^{h\nu/kT} - 1}$$

Planck’s law says that a black body, that is, an optically thick object in thermal equilibrium, will emit radiation of specific intensity given by the Planck function, also known as the blackbody function. Any radiation with this specific intensity is called blackbody radiation. A similar concept is thermal emission, which is defined by $S_\nu = B_\nu$ and therefore only implies blackbody radiation if the emitting medium is optically thick. Note that in case of thermal emission, one can substitute the definition of source function to write $j_\nu = \alpha_\nu B_\nu$, which is known as Kirchhoff’s law.

The brightness temperature $T_B$ of radiation of specific intensity $I_\nu$ at a given frequency $\nu$ is defined as the temperature that a black body would have to have in order to have the same specific intensity:

$$I_\nu = B_\nu(T_B)$$

There are two asymptotic approximations of the blackbody radiation: if $h\nu \ll kT$, that is, small frequency or high temperature, we have the Rayleigh–Jeans approximation for the specific intensity:

$$I_\nu = \frac{2\nu^2}{c^2}\,kT$$

This is valid in most areas of radio astronomy, except for example some cases of thermal dust emission.

In the other limit, where $h\nu \gg kT$, that is, large frequency or low temperature, we have the Wien approximation, named after the German physicist Wilhelm Carl Werner Otto Fritz Franz Wien who derived it in 1896:

$$I_\nu = \frac{2h\nu^3}{c^2}\,e^{-h\nu/kT}$$
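As a quick numerical check of the two limits, the sketch below implements the Planck function in cgs units alongside the Rayleigh–Jeans and Wien forms, plus the inversion $I_\nu = B_\nu(T_B)$ for the brightness temperature. The function names and the test frequencies (a 1.42 GHz radio line and a mid-infrared frequency, both at 100 K) are illustrative assumptions.

```python
import math

H = 6.62607015e-27      # Planck constant [erg s]
C = 2.99792458e10       # speed of light [cm/s]
K_B = 1.380649e-16      # Boltzmann constant [erg/K]

def planck(nu, T):
    """B_nu(T) [erg s^-1 cm^-2 Hz^-1 sr^-1]."""
    return 2.0 * H * nu**3 / C**2 / math.expm1(H * nu / (K_B * T))

def rayleigh_jeans(nu, T):
    """Low-frequency limit, h nu << kT."""
    return 2.0 * nu**2 * K_B * T / C**2

def wien(nu, T):
    """High-frequency limit, h nu >> kT."""
    return 2.0 * H * nu**3 / C**2 * math.exp(-H * nu / (K_B * T))

def brightness_temperature(I_nu, nu):
    """T_B [K] such that planck(nu, T_B) == I_nu."""
    return H * nu / K_B / math.log1p(2.0 * H * nu**3 / (C**2 * I_nu))

T = 100.0
nu_radio, nu_ir = 1.42e9, 3.0e13       # h nu / kT ~ 7e-4 and ~ 14
rj_ratio = rayleigh_jeans(nu_radio, T) / planck(nu_radio, T)
wien_ratio = wien(nu_ir, T) / planck(nu_ir, T)
T_B = brightness_temperature(planck(nu_ir, T), nu_ir)
print(rj_ratio, wien_ratio, T_B)
```

Both ratios are very close to 1 in their respective regimes, and the brightness temperature of a true blackbody recovers its physical temperature at any frequency.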


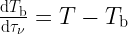

Now assume the background radiation of brightness temperature $T_{B_0}$ traverses a medium of temperature T, source function $S_\nu$, and optical depth $\tau_\nu$. In the Rayleigh–Jeans regime $I_\nu \propto T_B$ and $S_\nu \propto T$, therefore the differential and integral forms of the radiative transfer equation become

$$\frac{\mathrm{d}T_B}{\mathrm{d}\tau_\nu} = T - T_B; \qquad T_B = T_{B_0}\, e^{-\tau_\nu} + T\left(1 - e^{-\tau_\nu}\right)$$

where $T_B$ is the brightness temperature of the radiation leaving the medium.

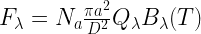

This can be applied to dust emission observations assuming an “emissivity-modified” blackbody radiation. Then the contribution of particles of a given linear size a is

$$F_\lambda = N_a\, \frac{\pi a^2}{D^2}\, Q_\lambda\, B_\lambda(T)$$

where $N_a$ is the number of such particles, $D$ is the distance to the observer, and $Q_\lambda$ is the emissivity. Recall that the blackbody intensity with respect to wavelength is

$$B_\lambda(T) = \frac{2hc^2}{\lambda^5}\,\frac{1}{e^{hc/\lambda kT} - 1}$$

We refer the reader to Hildebrand 1983.

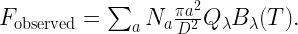

It turns out that the emissivity in far infrared follows a power law, $Q_\lambda \propto \lambda^{-\beta}$, with

- $\beta = 0$ for blackbody

- $\beta = 1$ for amorphous lattice-layer materials

- $\beta = 2$ for metals and crystalline dielectrics

The observed flux will be

The same emission can be a result of different values of T, Q, and N.

To determine the total mass of dust, we write

where  is an average weighted appropriately.


If  , then emission essentially depends on grain surface area, and we can write


Hildebrand 1983 gives us an empirical formula for dust opacity, implicitly assuming a gas-to-dust ratio of 100, which can be extended with the power law described above to arrive at

A typical modern value for interstellar dust is  , but it is highly disputed. It can be determined based on the SED slope in the Rayleigh–Jeans regime, where


ISM of the Milky Way

The ISM in the Milky Way can be divided into cold stuff and hot stuff. The cold stuff is dust and gas. Katherine will talk briefly about the importance of cooling via CO emission. Hot stuff will be discussed by Ragnhild and Vicente (also see Spitzer 1958), and Tanmoy already talked about supernovae. Suggested reading is Chapters 5 and 19 of Draine. In particular, if you ever need a reference of term symbols (uppercase Greek letters with subscripts and superscripts), see page 39.

Cold ISM

The molecular gas is mostly composed of  molecules. This, however, rarely can be observed directly: it has no dipole moment, therefore no rovibrational energy levels. (As exceptions, UV absorption lines can be observed in hot

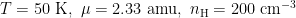

molecules. This, however, rarely can be observed directly: it has no dipole moment, therefore no rovibrational energy levels. (As exceptions, UV absorption lines can be observed in hot  gas, vibrational lines can be observed in shocked ISM, and NIR lines can be observed in “cold” hot ISM.) Instead of observing hydrogen lines, trace species are used as proxies to infer hydrogen density. The choice of preferred trace species depends on their abundance. To quantify this, we define the critical density as

gas, vibrational lines can be observed in shocked ISM, and NIR lines can be observed in “cold” hot ISM.) Instead of observing hydrogen lines, trace species are used as proxies to infer hydrogen density. The choice of preferred trace species depends on their abundance. To quantify this, we define the critical density as

where A is the Einstein coefficient and  is the collision rate for a given transition. This of course will depend on temperature, as the collision rate is the product of the temperature-independent collisional cross section

is the collision rate for a given transition. This of course will depend on temperature, as the collision rate is the product of the temperature-independent collisional cross section  and the temperature-dependent particle velocity v. For a detailed explanation of critical density, see pp.~81–87 of Spitzer, and Chapter 19 of Draine.

and the temperature-dependent particle velocity v. For a detailed explanation of critical density, see pp.~81–87 of Spitzer, and Chapter 19 of Draine.
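A rough Python sketch of this estimate, taking $\gamma = \sigma v$ with $v$ the mean relative speed of a Maxwellian, is given below. All input values are assumptions chosen for illustration: an Einstein coefficient of $7.2 \times 10^{-8}\ \mathrm{s^{-1}}$ for CO $J = 1 \to 0$, a hard-sphere cross section of $10^{-15}\ \mathrm{cm^2}$, and $\mathrm{H_2}$ as the collision partner. Real collision rate coefficients should be taken from tabulated data.

```python
import math

K_B = 1.380649e-16   # Boltzmann constant [erg/K]
M_H = 1.6735e-24     # hydrogen atom mass [g]

def critical_density(A, sigma, T, mu):
    """n_crit = A / gamma, with gamma = sigma * mean relative speed [cm^-3]."""
    v_mean = math.sqrt(8.0 * K_B * T / (math.pi * mu))   # [cm/s]
    gamma = sigma * v_mean                                # [cm^3/s]
    return A / gamma

A_CO_10 = 7.2e-8                         # assumed Einstein A for CO J=1-0 [1/s]
SIGMA = 1e-15                            # assumed cross section [cm^2]
mu_CO_H2 = (28.0 * 2.0) / 30.0 * M_H     # reduced mass of CO + H2 [g]

n_crit_10K = critical_density(A_CO_10, SIGMA, 10.0, mu_CO_H2)
n_crit_40K = critical_density(A_CO_10, SIGMA, 40.0, mu_CO_H2)
print(n_crit_10K, n_crit_40K)
```

With a fixed cross section the critical density scales as $T^{-1/2}$, so quadrupling the temperature halves it.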

Typically assumed values are  ,

,  ,

,  ,

,  . The Einstein coefficient for the

. The Einstein coefficient for the  transition of CO is

transition of CO is  , yielding a critical density of

, yielding a critical density of  . Spitzer gives us fiducial values for critical densities around

. Spitzer gives us fiducial values for critical densities around  :

:

- CO,

,

,  mm,

mm,  ,

,

,

,

mm,

mm,  ,

,

- CS,

,

,  mm,

mm,  ,

,

- HCN,

,

,  mm,

mm,  ,

,

In practice, the following tracers are used for cold clouds in the range of  :

:

- Low density (n=10 cm^-3): 12CO

- Dark Cloud (n=300): 13CO, OH

- Dense Core (n=10^3): C18O, CS

- Dense Core (n=5 * 10^3): NH3, N2H+, CS

- Very Dense (n=10^8): OH Masers

- Very Very Dense (n=10^10): H2O masers

See handout on dense core multi-line half power contours from Myers 1991, and note that sizes do not proceed as critical densities would suggest.

As a reminder, electronic energy levels are much further apart, resulting in higher frequency transition lines. Vibrational levels are a few orders of magnitude more tightly spaced, then rotational energy levels are even closer. Here we give a brief overview of rotational line structure; see Chapter 5.1.5 of Draine for more details.

Quantum mechanically, a diatomic molecule has energy levels identified by $J$ with energy $E(J) = \frac{\hbar^2}{2I} J(J+1)$ (which would have $J^2$ instead in the quasiclassical theory). Here $I = \mu r_0^2$ is the moment of inertia, $r_0$ is the distance between the atoms, and $\mu$ is their reduced mass. $B_v = \frac{\hbar^2}{2I}$ is the rotation constant. The $v$ index signifies that these values are valid for a given vibrational state only. Therefore the transition energy between the states J-1 and J is

$$\Delta E = E(J) - E(J-1) = 2 B_v J$$

In case of $^{12}\mathrm{C}^{16}\mathrm{O}$, $B_0/hc = 1.9225\ \mathrm{cm^{-1}}$. For the $J = 1 \to 0$ transition, $\Delta E/k = 5.5$ K, corresponding to $\lambda = 2.6$ mm, or $\nu = 115$ GHz. The $J = 2 \to 1$ and $J = 3 \to 2$ transitions have twice and three times higher energy differences, respectively.
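The rotational ladder implied by $\Delta E = 2 B_v J$ can be tabulated with a few lines of Python. This is a rigid-rotor sketch that ignores centrifugal distortion, using an assumed rotation constant of $1.9225\ \mathrm{cm^{-1}}$ for CO in its ground vibrational state; the function name is illustrative.

```python
C = 2.99792458e10       # speed of light [cm/s]
H = 6.62607015e-27      # Planck constant [erg s]
K_B = 1.380649e-16      # Boltzmann constant [erg/K]
B_CO = 1.9225           # assumed CO rotation constant B_0/(hc) [cm^-1]

def rotational_line(J_upper, B_wavenumber=B_CO):
    """Frequency [Hz], wavelength [cm] and Delta E / k [K] for J -> J-1."""
    nu = 2.0 * B_wavenumber * C * J_upper    # Delta E / h = 2 B J
    wavelength = C / nu
    delta_E_over_k = H * nu / K_B
    return nu, wavelength, delta_E_over_k

for J in (1, 2, 3):
    nu, lam, dE_k = rotational_line(J)
    print(f"J = {J} -> {J - 1}: {nu / 1e9:.2f} GHz, {lam * 10:.2f} mm, {dE_k:.2f} K")
```

The $J = 1 \to 0$ line lands at about 115 GHz (2.6 mm), and each higher transition is an integer multiple of that frequency in this approximation.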

We can estimate which transition gives maximum emission (in terms of number of photons) at a given temperature:

- The SMA and ALMA are sensitive to J=4-7

- KAO and SOFIA are sensitive to J=20-40

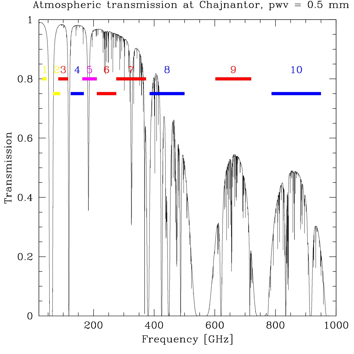

Note that  at 2.6 mm is more visible from Earth than

at 2.6 mm is more visible from Earth than  due to atmospheric extinction.

due to atmospheric extinction.

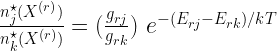

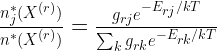

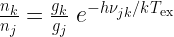

Collisional Excitation

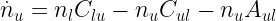

In LTE, the transition rates for a collisional $j \to k$ transition are

$$\frac{\text{rate}}{\text{volume}} = n_j n_c\, \gamma_{jk}, \qquad \gamma_{jk} = \langle \sigma v \rangle,$$

where $n_c$ is the number density of colliders, $\gamma_{jk}$ is the rate coefficient for the transition, $\sigma$ is the collisional cross section and v is the particle velocity.

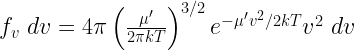

In case of Maxwellian velocity distribution, we have

where  is the reduced mass. For neutral-neutral state transitions,

is the reduced mass. For neutral-neutral state transitions,  is typically

is typically  , and for an ionizing transition,

, and for an ionizing transition,  .

.

In equilibrium, we have

where $n_{\rm crit}$ is the critical density. When $n \gg n_{\rm crit}$, collisions dominate over spontaneous emission, resulting in

However, in case of $n \ll n_{\rm crit}$, spontaneous emission dominates decay, so each collisional excitation results in an emission:

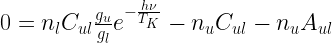

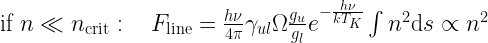

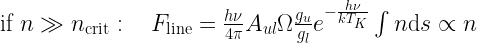

In case of optically thin emission,

where $\Omega$ is the apparent solid angle of the source. Then

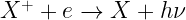

Recombination of Ions with Electrons

For more details, see Draine Chapter 14.

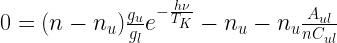

In HII regions, most recombination happens radiatively: $\mathrm{H^+} + e^- \to \mathrm{H} + h\nu$.

An electron with kinetic energy $E$ can recombine to any level of hydrogen. The energy of the photon emitted is then given by $h\nu = E + I_{nl}$, where $I_{nl}$ is the binding energy of quantum state nl.

There are two extreme cases in recombination (see Baker and Menzel 1938):

- Case A: The medium is optically thin to ionizing radiation. Appropriate in shock-heated regions ($T \sim 10^6$ K) where density is very low.

- Case B: Optically thick to ionizing radiation. When an atom recombines to the n=1 state, the emitted Lyman continuum photon is immediately reabsorbed, so that recombinations to n=1 do not change the ionization state. This is called the “on the spot” approximation.

Optical tracers of recombination: $\mathrm{H}\alpha$ and other lines

Radio: Radio recombination lines (see Draine 10.7). Rydberg States are recombinations to very high (n>100) hydrogen energy levels. Spontaneous decay gives

![\nu_{n\alpha} = \frac{2n+1}{[n(n+1)]^2} \frac{I_H}{h} \sim 6.479 \big(\frac{100.5}{n+0.5}\big)^3 {\rm GHz}](https://s0.wp.com/latex.php?latex=%5Cnu_%7Bn%5Calpha%7D+%3D+%5Cfrac%7B2n%2B1%7D%7B%5Bn%28n%2B1%29%5D%5E2%7D+%5Cfrac%7BI_H%7D%7Bh%7D+%5Csim+6.479+%5Cbig%28%5Cfrac%7B100.5%7D%7Bn%2B0.5%7D%5Cbig%29%5E3+%7B%5Crm+GHz%7D&bg=FFFFFF&fg=000000&s=2&c=20201002)

A popular line is $\mathrm{H}166\alpha$. This is often observed because of its proximity to the 1420 MHz line of HI.
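The frequency formula above is easy to evaluate directly. The Python sketch below compares the exact expression $\nu_{n\alpha} = \frac{2n+1}{[n(n+1)]^2}\frac{I_H}{h}$ with the scaling approximation quoted in the text; the value $I_H/h = 3.28805 \times 10^{15}$ Hz (from a hydrogen ionization energy of about 13.598 eV) and the function names are assumptions of this sketch.

```python
I_H_OVER_H = 3.28805e15   # assumed hydrogen ionization energy / h [Hz]

def rrl_frequency_exact(n):
    """Frequency [Hz] of the hydrogen n-alpha line (n+1 -> n)."""
    return (2 * n + 1) / (n * (n + 1)) ** 2 * I_H_OVER_H

def rrl_frequency_approx(n):
    """Scaling approximation 6.479 GHz * (100.5 / (n + 0.5))^3, in Hz."""
    return 6.479e9 * (100.5 / (n + 0.5)) ** 3

for n in (100, 166):
    print(n, rrl_frequency_exact(n) / 1e9, rrl_frequency_approx(n) / 1e9)
```

For $n = 166$ both forms give roughly 1.425 GHz, only a few MHz above the 1420 MHz HI line, which is why the two lines can be observed with the same receiver setup.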

Radio recombination lines often involve masing.

Star Formation in Molecular Clouds

Topics to be covered include:

- The Jeans Mass

- Free Fall Time

- Virial Theorem

- Instabilities

- Magnetic Fields

- Non-Isolated Systems

- “Turbulence”

An overview of the steps of star formation.

Basic properties of a GMC

Mass:

Lifetime: Uncertain. Probably 10 Myr (maybe as long as 100 Myr). “Lifetime” is not easily defined or inferred, since clouds are constantly fragmenting, exchanging mass with their surroundings, etc.

We roughly describe the hierarchy and fragmentation of clouds via the following terms:

- Clump. 10-100 $M_\odot$. 1 pc. The progenitor of stellar clusters.

- Core. 1-10 $M_\odot$. 0.1 pc. The progenitor of individual stars, and small stellar systems.

- Star. The end-product of fragmentation and collapse. 1 $M_\odot$.

Note that, across these scales, density increases by tens of orders of magnitude:

Figure: Schematic of how a GMC fragments into clumps, cores, and stars.

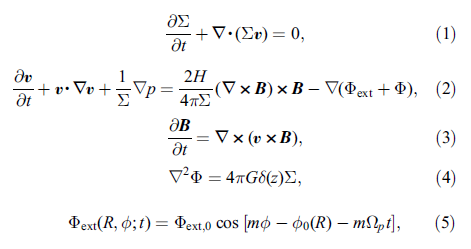

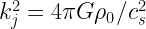

The Jeans Mass

For further details, see Draine ch 41.

Let’s analyze the stability of a sphere of gas, where thermal pressure is balanced by self-gravity.

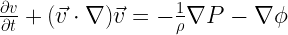

Start with the basic hydro equations (conservation of mass, momentum, and Poisson’s equation for the gravitational potential):

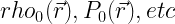

Consider an equilibrium solution $\rho_0(\vec{r})$, $\vec{v}_0(\vec{r})$, $\phi_0(\vec{r})$ such that time derivatives are zero. Let’s perturb that solution slightly, and analyze when that perturbation grows unstably:

$$\rho = \rho_0 + \rho_1, \qquad \vec{v} = \vec{v}_0 + \vec{v}_1, \qquad \phi = \phi_0 + \phi_1.$$

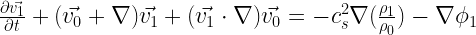

The linear hydro equations, to first order in the perturbations, are

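Let's restrict our attention to an isothermal gas, so the equation of state is $p = \rho c_s^2$, where $c_s$ is the isothermal sound speed. Then, the momentum equation becomes

$$\frac{\partial \vec{v}_1}{\partial t} + (\vec{v}_0 \cdot \nabla)\vec{v}_1 + (\vec{v}_1 \cdot \nabla)\vec{v}_0 = -c_s^2\, \nabla\!\left(\frac{\rho_1}{\rho_0}\right) - \nabla\phi_1$$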

Jeans took these equations and added:

- Uniform density to start with ($\nabla\rho_0 = 0$)

- Stationary gas ($\vec{v}_0 = 0$)

- Gradient-free equilibrium potential ($\nabla\phi_0 = 0$)

Then, the solution becomes (after taking  the momentum equation)

the momentum equation)

Now consider plane wave perturbations

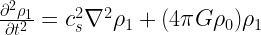

Define $k_J^2 \equiv 4 \pi G \rho_0 / c_s^2$, so

$$\omega^2 = c_s^2 \left( k^2 - k_J^2 \right).$$

$\omega$ is real if and only if $k \ge k_J$. Otherwise, $\omega$ is imaginary, and there is exponential growth of the instability. This then leads to a Jeans length:

$$\lambda_J = \frac{2 \pi}{k_J} = c_s \sqrt{\frac{\pi}{G \rho_0}}.$$

Gas is “Jeans unstable” when $\lambda > \lambda_J$. Exponentially growing perturbations will cause the gas to fragment into parcels of size $\sim \lambda_J$.
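As a quick numerical check, the short Python sketch below evaluates the Jeans dispersion relation $\omega^2 = c_s^2 k^2 - 4 \pi G \rho_0$ on either side of the critical wavenumber. The function names and the sample sound speed and density are illustrative assumptions, not values taken from these notes.

```python
import math

G = 6.674e-8  # gravitational constant in cgs units [cm^3 g^-1 s^-2]

def jeans_wavenumber(c_s, rho0):
    """Critical wavenumber k_J = sqrt(4 pi G rho0) / c_s [1/cm]."""
    return math.sqrt(4.0 * math.pi * G * rho0) / c_s

def omega_squared(k, c_s, rho0):
    """Dispersion relation omega^2 = c_s^2 k^2 - 4 pi G rho0 [1/s^2]."""
    return (c_s * k) ** 2 - 4.0 * math.pi * G * rho0

def growth_rate(k, c_s, rho0):
    """Exponential growth rate [1/s]; zero for stable (oscillating) modes."""
    w2 = omega_squared(k, c_s, rho0)
    return math.sqrt(-w2) if w2 < 0.0 else 0.0

# Assumed sample values: c_s = 0.27 km/s and rho0 = 3.9e-21 g/cm^3
c_s, rho0 = 2.7e4, 3.9e-21
k_j = jeans_wavenumber(c_s, rho0)
for factor in (0.5, 2.0):
    k = factor * k_j
    print(f"k = {factor:.1f} k_J: omega^2 = {omega_squared(k, c_s, rho0):+.3e} s^-2, "
          f"growth rate = {growth_rate(k, c_s, rho0):.3e} s^-1")
```

Modes with $k < k_J$ (long wavelengths) return a negative $\omega^2$ and a nonzero growth rate; modes with $k > k_J$ oscillate as sound waves.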

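Converting the Jeans length into a mass (assuming a sphere of diameter $\lambda_J$) yields the Jeans mass

$$M_J = \frac{4 \pi}{3} \rho_0 \left( \frac{\lambda_J}{2} \right)^3 = \frac{\pi^{5/2}}{6} \frac{c_s^3}{G^{3/2} \rho_0^{1/2}}.$$

This defines a “preferred mass” for substructures within a cloud.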

Plugging in values for a dense core gives a far smaller Jeans mass than plugging in numbers appropriate for the mean conditions in a GMC: since $M_J \propto T^{3/2} \rho_0^{-1/2}$, the much higher density of a core lowers its Jeans mass.
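To get a feel for the numbers, here is a minimal Python sketch of the Jeans length and Jeans mass, taking the mass to be that of a sphere of diameter $\lambda_J$. The temperatures, densities, and mean molecular weight ($\mu = 2.33\,m_H$) used below are assumed, illustrative values; they are not the ones originally quoted in these notes.

```python
import math

G = 6.674e-8      # gravitational constant [cm^3 g^-1 s^-2]
K_B = 1.381e-16   # Boltzmann constant [erg/K]
M_H = 1.673e-24   # hydrogen atom mass [g]
M_SUN = 1.989e33  # solar mass [g]
PC = 3.086e18     # parsec [cm]

def sound_speed(T, mu=2.33):
    """Isothermal sound speed [cm/s] for mean particle mass mu * M_H."""
    return math.sqrt(K_B * T / (mu * M_H))

def jeans_length(T, n, mu=2.33):
    """Jeans length [cm] for temperature T [K] and particle density n [cm^-3]."""
    rho = mu * M_H * n
    return sound_speed(T, mu) * math.sqrt(math.pi / (G * rho))

def jeans_mass(T, n, mu=2.33):
    """Mass [g] of a sphere of diameter lambda_J at density rho."""
    rho = mu * M_H * n
    return (4.0 * math.pi / 3.0) * rho * (jeans_length(T, n, mu) / 2.0) ** 3

# Assumed, illustrative conditions (not the values from the lecture)
for label, T, n in [("dense core", 10.0, 1e5), ("GMC mean", 20.0, 1e2)]:
    print(f"{label}: lambda_J = {jeans_length(T, n) / PC:.2f} pc, "
          f"M_J = {jeans_mass(T, n) / M_SUN:.1f} Msun")
```

Because $M_J \propto T^{3/2} n^{-1/2}$, raising the density by a factor of 100 at fixed temperature lowers the Jeans mass by a factor of 10.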

Note that, once gravitational collapse and heating set in, our isothermal sphere assumptions are no longer valid.

Collapse Timescale

For large scales ($\lambda \gg \lambda_J$), the growth time for the Jeans instability is

$$\tau_J = \frac{1}{k_J c_s} = \frac{1}{\sqrt{4 \pi G \rho_0}}.$$

For $n \sim 10^3~{\rm cm^{-3}}$, this is about 0.7 Myr. Compare this to the “free fall time” (the collapse timescale for a pressure-less gas),

$$\tau_{\rm ff} = \sqrt{\frac{3 \pi}{32 G \rho_0}}.$$

For $n \sim 10^3~{\rm cm^{-3}}$ this is 1.4 Myr, about twice the growth time.
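The two timescales can be checked with a few lines of Python. This is a sketch under stated assumptions: the density is taken to be $10^3$ hydrogen nuclei per cm$^3$, and the mass per hydrogen nucleus is assumed to be $1.4\,m_H$ to account for helium. Both choices, and the function names, are illustrative.

```python
import math

G = 6.674e-8     # gravitational constant [cm^3 g^-1 s^-2]
M_H = 1.673e-24  # hydrogen atom mass [g]
MYR = 3.156e13   # one megayear [s]

def mass_density(n_h, mass_per_h=1.4):
    """Mass density [g/cm^3]; mass_per_h = 1.4 is an assumed helium correction."""
    return mass_per_h * M_H * n_h

def jeans_growth_time(rho):
    """Large-scale Jeans growth time 1 / sqrt(4 pi G rho) [s]."""
    return 1.0 / math.sqrt(4.0 * math.pi * G * rho)

def free_fall_time(rho):
    """Pressureless free-fall time sqrt(3 pi / (32 G rho)) [s]."""
    return math.sqrt(3.0 * math.pi / (32.0 * G * rho))

rho = mass_density(1e3)  # assumed n_H = 10^3 cm^-3
t_j = jeans_growth_time(rho)
t_ff = free_fall_time(rho)
print(f"Jeans growth time: {t_j / MYR:.2f} Myr")
print(f"Free-fall time:    {t_ff / MYR:.2f} Myr")
print(f"Ratio t_J / t_ff:  {t_j / t_ff:.2f}")
```

The ratio is independent of density, since both times scale as $\rho_0^{-1/2}$.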

The Jeans Swindle

There is a sinister flaw in Jeans’s analysis. Assuming $\nabla \phi_0 = 0$ implies that $\nabla^2 \phi_0 = 4 \pi G \rho_0 = 0$. The only way to satisfy this everywhere is for $\rho_0 = 0$. However, more rigorous analysis verifies that Jeans’s approach still yields approximately correct results.

More Realistic Treatment

The Bonnor-Ebert mass is the “largest mass an isothermal gas sphere can have in a \textbf{pressurized medium} while staying in hydrostatic equilibrium.”

Numerical Star Formation Simulations

(Notes from Guest Lecture by Stella Offner)

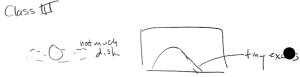

We start by writing down the conservation laws for mass, momentum and energy, each in the form of “rate of change of conserved quantity + flux = source density”:

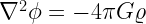

Also, the Poisson equation for gravity is

$$\nabla^2 \phi = 4 \pi G \rho.$$

The initial conditions are determined by quantities such as the temperature T, the density, and the bulk velocity, which further determine the total mass, total angular momentum, turbulence and other global properties.

Grid-based codes

One type of star formation simulation is grid-based. Many of these feature adaptive mesh refinement (AMR), increasing spatial resolution in areas where parameters vary on smaller scales. Examples of such codes are Orion, Ramses, Athena, Zeus and Enzo.

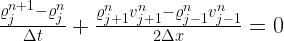

This algorithm stores fluid variables such as $\rho$ and $\vec v$ at nodes indexed by j. The values at time step n+1 are calculated from those at time step n by discretized versions of the conservation equations. For instance, a discretized mass equation can be written as

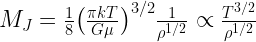

First, a homogeneous grid is created, with resolution satisfying the Truelove condition, also called the Jeans condition:

$$\Delta x \le J \lambda_J,$$

where the empirical value for the Jeans number is $J \lesssim 0.25$, i.e., the local Jeans length is resolved by at least four cells. The simulation then proceeds, repartitioning a cell by adding nodes where the spatial variation of the fluid variables (e.g., $\rho$ or $\vec v$) requires it. The timestep is global, but it can also be adaptively changed, as long as it satisfies the Courant condition

$$\Delta t \le C \frac{\Delta x}{v_{\rm max}}, \qquad C = 0.5,$$

where $v_{\rm max}$ is the fastest signal speed on the grid, ensuring that gas does not move more than half a cell in one time step.
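To make the idea of a discretized mass equation with a Courant-limited timestep concrete, here is a minimal Python sketch. It is not the scheme used by any of the codes named above: it assumes a 1D periodic grid, a constant positive velocity, and a first-order upwind flux, and all function names are hypothetical.

```python
def courant_timestep(dx, v_max, cfl=0.5):
    """Largest timestep such that gas moves at most `cfl` cells per step."""
    return cfl * dx / v_max

def upwind_step(rho, v, dx, dt):
    """One first-order upwind update of the 1D mass equation (v > 0, periodic).

    rho_j^{n+1} = rho_j^n - (dt / dx) * (F_j - F_{j-1}),  with flux F_j = rho_j * v.
    """
    flux = [r * v for r in rho]
    # flux[j - 1] with j = 0 wraps to the last cell, giving periodic boundaries
    return [rho[j] - dt / dx * (flux[j] - flux[j - 1]) for j in range(len(rho))]

# Assumed test problem: a top-hat overdensity advected to the right
rho = [1.0] * 8 + [2.0] * 4 + [1.0] * 8
dx, v = 1.0, 1.0
dt = courant_timestep(dx, v)
mass_before = sum(rho) * dx
for _ in range(10):
    rho = upwind_step(rho, v, dx, dt)
mass_after = sum(rho) * dx
print(f"total mass before: {mass_before:.6f}, after: {mass_after:.6f}")
```

Writing the update in flux form means whatever leaves one cell enters its neighbor, so total mass is conserved to round-off. With a Courant number of 0.5 each new value is an average of two old ones, so the scheme stays stable, at the cost of smearing the sharp edges of the top-hat.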

Particle-Based Codes

The other family of codes is particle-based, also referred to as smoothed particle hydrodynamics (SPH) codes. Here individual pointlike particles are traced. Example codes are Gadget, Gasoline and Hydra.

To solve the equations, density has to be smoothed with a smoothing length h that has to be larger than the characteristic distance between the particles. Usually we want approximately twenty particles within the smoothing radius. The formula for smoothing is

$$\rho(\vec r) = \sum_j m_j W(|\vec r - \vec r_j|, h),$$

where $W$ is the smoothing kernel, for example a Gaussian or a cubic spline in $q = |\vec r - \vec r_j| / h$.
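The kernel-smoothed density estimate can be sketched in a few lines of Python. This is an illustration under assumptions rather than the kernel used in the lecture: it adopts a normalized 3D Gaussian kernel, a brute-force sum over all particles (real codes use a tree to find neighbors), and a hypothetical test lattice of unit-mass particles.

```python
import math

def gaussian_kernel(r, h):
    """3D Gaussian smoothing kernel, normalized so its volume integral is 1."""
    return math.exp(-(r / h) ** 2) / (math.pi ** 1.5 * h ** 3)

def sph_density(point, positions, masses, h):
    """Smoothed density at `point`: rho = sum_j m_j W(|r - r_j|, h)."""
    rho = 0.0
    for pos, m in zip(positions, masses):
        rho += m * gaussian_kernel(math.dist(point, pos), h)
    return rho

# Assumed test case: unit-mass particles on a cubic lattice with unit spacing,
# so the true mean density is 1 in these arbitrary units.
lattice = [(x, y, z)
           for x in range(-6, 7)
           for y in range(-6, 7)
           for z in range(-6, 7)]
masses = [1.0] * len(lattice)
rho_center = sph_density((0.0, 0.0, 0.0), lattice, masses, h=1.5)
print(f"smoothed density at the lattice center: {rho_center:.4f}")
```

With a smoothing length larger than the particle spacing, the estimate at the lattice center recovers the mean density closely. Shrinking h below the spacing makes the estimate noisy, because too few particles contribute.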

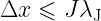

The Lagrangian of the system is

$$L = \sum_i m_i \left( \frac{1}{2} v_i^2 - u_i \right),$$

where $u_i$ is the specific internal energy of particle $i$.

The Euler–Lagrange equations governing the motion of the particles are

$$\frac{d}{dt} \frac{\partial L}{\partial \vec v_i} - \frac{\partial L}{\partial \vec r_i} = 0.$$

It turns out that solving these equations leads to

$$\frac{d \vec v_i}{dt} = -\sum_j m_j \left( \frac{P_i}{\rho_i^2} + \frac{P_j}{\rho_j^2} + \Pi_{ij} \right) \nabla_i W(|\vec r_i - \vec r_j|, h),$$

where $\Pi_{ij}$ is an artificial viscosity term added for more accurate modeling of transient behavior. The nature of the simulation requires that particles are stored in a data structure that facilitates easy search based on their position, for example a tree. As particles move, this structure needs to be updated.

The Jeans condition for this kind of simulation is that

$$M_{\rm min} < M_J,$$

where $M_{\rm min}$ is the minimum resolved fragmentation mass, equal to the typical total mass within a smoothing length of any particle.

Comparison

The advantages of grid-based codes are that they are

- accurate for simulating shocks,

- more stable at instabilities.

Their disadvantages are that

- grid orientation may cause directional artifacts,

- grid imprinting might appear at the edges.

Also, they are more likely to break if there’s a bug in the code. On the other hand, particle-based codes have the advantages of being

- inherently Lagrangian,

- inherently Galilei invariant, that is, describing convection and flows well,

- accurate with gravity,

- good with general geometries.

At the same time, they suffer from

- resolution problems in low density regions,

- the need for an artificial viscosity term,

- the need for post processing to extract information on density and momentum distribution,

- statistical noise.

See slides for the effect of too large J for a grid-based simulation, too small h for a particle-based simulation, for examples involving shock fronts, Kelvin–Helmholtz instability and Rayleigh–Taylor instability, and star formation. Note that the simulated IMF matches theory and observations within an order of magnitude, but this is very sensitive to the initial temperature of the molecular cloud.

Some thoughts on Jeans scales

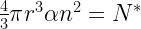

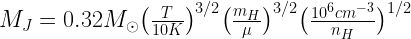

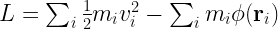

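Recall the equation for the Jeans Mass:

$$M_J = \frac{4 \pi}{3} \rho_0 \left( \frac{\lambda_J}{2} \right)^3 = \frac{\pi^{5/2}}{6} \frac{c_s^3}{G^{3/2} \rho_0^{1/2}}.$$

In common units, this scales as $M_J \propto T^{3/2} n^{-1/2}$, so it depends on both the temperature and the density of the gas.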

This raises a question: which Jeans mass do we choose if a gas cloud is hierarchical, with many different densities and temperatures? This motivates the idea of turbulent fragmentation, wherein a collapsing cloud fragments at several scales as it collapses. We will return to this.

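Also recall that the Jeans growth timescale for structures much larger than the Jeans size is

$$\tau_J = \frac{1}{\sqrt{4 \pi G \rho_0}}.$$

Compare this to the pressureless freefall time:

$$\tau_{\rm ff} = \sqrt{\frac{3 \pi}{32 G \rho_0}}.$$

From which we see

$$\frac{\tau_J}{\tau_{\rm ff}} = \sqrt{\frac{8}{3 \pi^2}} \approx 0.52.$$

The Jeans growth time is about 1/2 the free-fall time.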

Finally, compare this to the crossing time in a region with $n \sim 10^3~{\rm cm^{-3}}$ and $T \sim 20$ K. The sound speed is $c_s = \sqrt{kT/\mu}$, where $\mu$ is the mass per particle, which is 0.27 km/s for molecular hydrogen, and 0.08 km/s for 13CO.

However, note that the observed linewidth in 13CO for such regions is of the order 1 km/s. Clearly, non-thermal energies dominate the motion of gas in the ISM.

Recall that the Jeans length is

$$\lambda_J = c_s \sqrt{\frac{\pi}{G \rho_0}}.$$

Plugging in the thermal H2 sound speed yields $\lambda_J \approx 1$ pc. The crossing time for CO gas moving at 1 km/s is thus 1 Myr in this region.

So, in a cloud with $n \sim 10^3~{\rm cm^{-3}}$ and $T \sim 20$ K, the Jeans growth time, free fall time, and crossing time are all comparable.
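The thermal speeds and the crossing time quoted here can be reproduced with a short Python sketch. The temperature of 20 K, the mean particle mass of $2.33\,m_H$ for molecular gas (H2 plus helium), the mass of $29\,m_H$ for 13CO, and the 1 pc length scale are all assumptions chosen to be consistent with the quoted speeds; the function names are illustrative.

```python
import math

K_B = 1.381e-16  # Boltzmann constant [erg/K]
M_H = 1.673e-24  # hydrogen atom mass [g]
KM = 1.0e5       # kilometer [cm]
PC = 3.086e18    # parsec [cm]
MYR = 3.156e13   # one megayear [s]

def thermal_speed(T, mass_in_m_h):
    """Thermal speed sqrt(k T / m) [cm/s] for a particle of mass mass_in_m_h * M_H."""
    return math.sqrt(K_B * T / (mass_in_m_h * M_H))

def crossing_time(length, speed):
    """Time [s] to cross `length` [cm] at `speed` [cm/s]."""
    return length / speed

T = 20.0  # assumed gas temperature [K]
print(f"molecular gas (2.33 m_H): {thermal_speed(T, 2.33) / KM:.2f} km/s")
print(f"13CO (29 m_H):            {thermal_speed(T, 29.0) / KM:.2f} km/s")
print(f"crossing 1 pc at 1 km/s:  {crossing_time(PC, KM) / MYR:.2f} Myr")
```

A handy rule of thumb falls out of the last line: 1 km/s is very nearly 1 pc per Myr.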

There is a problem with our derivation, though! We assumed the relevant sound speed that sets the Jeans length is the thermal hydrogen sound speed. However, these clouds are not supported thermally, as the non-thermal, turbulent velocity dispersion dominates the observed linewidths. This suggests we use an equivalent Jeans length where we replace the sound speed by the turbulent linewidth.

This is often done in the literature, but it’s sketchy. Using turbulent linewidths in a virial analysis assumes that turbulence acts like thermal motion, i.e., it provides an isotropic pressure. This may not be the case, as turbulent motions can be partially ordered in a way that provides little support against gravity.

The question of how a cloud behaves when it is not thermally supported leads to a discussion of Larson’s Laws (JC 2013).

Larson’s Legacy

see these slides

Stellar Winds in Star Forming Regions

Guest Lecture by Hector Arce. See this post for notes. See also this movie

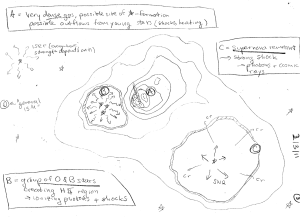

Introduction to shocks

Shocks occur when density perturbations are driven through a medium at a speed greater than the sound speed in that medium. When that happens, sound waves cannot propagate and dissipate these perturbations. Overdense material then piles up at a shock front.

Collisions in the shock front will alter the density, temperature, and pressure of the gas. The relevant size scale over which this happens (i.e., the size of the shock front) is the size scale over which particles communicate with each other via collisions, i.e., the collisional mean free path.

A shock

An excellent reference offering a three-page summary of important shock basics (including jump conditions) is available in this copy of a handout from Jonathan Williams ISM class at the IfA in Hawaii.

Shock De-Jargonification

See this handout and this list of examples.

Shock(ing facts about) viscosity

A perfect shock is a discontinuity, but real shocks are smoothed out by viscosity. Viscosity is the resistance of a fluid to deformation (it is the equivalent of friction in a fluid).

Inviscid or ideal fluids have zero viscosity. Colder gases are less viscous, because collisions are less frequent.

Simulations include numerical viscosity by accident: it is the result of computational approximations / discretization that violate Euler’s equations and lead to a momentum flow that acts like true viscosity. However, the behavior of numerical viscosity can be unrealistic and of inappropriate magnitude.

True shocks are a few mean free paths thick, which is typically less than the resolution of simulations. Thus, simulations also add artificial viscosity to smooth out and resolve shock fronts.

Rankine Hugoniot Jump Conditions

This section is based on the 2013 Wikipedia entry for “Rankine-Hugoniot Conditions,” archived as a PDF here.

The jump conditions describe how properties of a gas change on either side of a shock front. They are obtained by integrating the Euler equations over the shock front. As a reminder, the mass, momentum, and energy equations in 1 dimension are

$$\frac{\partial \rho}{\partial t} = -\frac{\partial}{\partial x} (\rho u)$$

$$\frac{\partial}{\partial t} (\rho u) = -\frac{\partial}{\partial x} \left( \rho u^2 + p \right)$$

$$\frac{\partial}{\partial t} (\rho E) = -\frac{\partial}{\partial x} \left[ \rho u \left( e + \frac{1}{2} u^2 + \frac{p}{\rho} \right) \right]$$

where $E = e + \frac{1}{2} u^2$ is the fluid specific energy, and $e$ is the internal (non-kinetic) specific energy.

We supplement these equations with an equation of state. For adiabatic shocks (a bad term, but it describes shocks where radiative losses are small), the equation of state is

$$p = (\gamma - 1) \rho e.$$

Generally, a jump condition is a condition that holds at a near-discontinuity. It ignores the details about how a quantity changes across the discontinuity, and instead describes the quantity on either side.

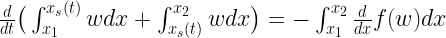

The general conservation law for some quantity w is

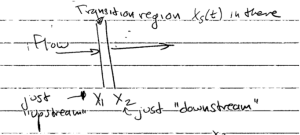

A shock is a jump in w at some point $x = x_s(t)$. We call $x_1$ the point just upstream of the shock, and $x_2$ the point just downstream.

A schematic defining the notation for the shock jump conditions

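Integrating the conservation equation $\partial w / \partial t + \partial f(w) / \partial x = 0$ over the shock, from $x_1$ to $x_2$,

$$\frac{d}{dt} \left( \int_{x_1}^{x_s(t)} w \, dx + \int_{x_s(t)}^{x_2} w \, dx \right) = -\int_{x_1}^{x_2} \frac{\partial f(w)}{\partial x} \, dx = -f(w) \Big|_{x_1}^{x_2}.$$

Carrying out the time derivative on the left with the Leibniz rule, and writing $w_1$ and $w_2$ for the values of $w$ just upstream and just downstream of $x_s$, gives

$$(w_1 - w_2) \frac{dx_s}{dt} + \int_{x_1}^{x_s(t)} \frac{\partial w}{\partial t} \, dx + \int_{x_s(t)}^{x_2} \frac{\partial w}{\partial t} \, dx = -f(w) \Big|_{x_1}^{x_2}.$$

Let $x_1 \to x_s(t)$ when approaching from upstream and $x_2 \to x_s(t)$ when approaching from downstream. The two remaining integrals then vanish, and in the limit

$$S (w_1 - w_2) = f(w_1) - f(w_2),$$

where $S = dx_s / dt$ is the speed of the shock.

The upstream and downstream characteristic speeds are constrained via the Lax entropy condition,

$$f'(w_1) > S > f'(w_2).$$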

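Moving away from the generic w formalism and to the Euler equations, we find

$$S (\rho_2 - \rho_1) = \rho_2 u_2 - \rho_1 u_1$$

$$S (\rho_2 u_2 - \rho_1 u_1) = \left( \rho_2 u_2^2 + p_2 \right) - \left( \rho_1 u_1^2 + p_1 \right)$$

$$S (\rho_2 E_2 - \rho_1 E_1) = \rho_2 u_2 \left( e_2 + \frac{1}{2} u_2^2 + \frac{p_2}{\rho_2} \right) - \rho_1 u_1 \left( e_1 + \frac{1}{2} u_1^2 + \frac{p_1}{\rho_1} \right)$$

These are the Rankine-Hugoniot conditions for the Euler equations.

In going to a frame co-moving with the shock (i.e. $v = S - u$), one can show that

$$S = u_1 + c_1 \sqrt{1 + \frac{\gamma + 1}{2 \gamma} \left( \frac{p_2}{p_1} - 1 \right)},$$

where $c_1 = \sqrt{\gamma p_1 / \rho_1}$ is the upstream sound speed.

For a stationary shock, S = 0 and the 1D Euler equations become

$$\rho_1 u_1 = \rho_2 u_2$$

$$\rho_1 u_1^2 + p_1 = \rho_2 u_2^2 + p_2$$

$$\rho_1 u_1 \left( e_1 + \frac{1}{2} u_1^2 + \frac{p_1}{\rho_1} \right) = \rho_2 u_2 \left( e_2 + \frac{1}{2} u_2^2 + \frac{p_2}{\rho_2} \right)$$

After more substitution and defining the upstream Mach number $M_1 = u_1 / c_1$,

$$\frac{\rho_2}{\rho_1} = \frac{u_1}{u_2} = \frac{(\gamma + 1) M_1^2}{(\gamma - 1) M_1^2 + 2}, \qquad \frac{p_2}{p_1} = \frac{2 \gamma M_1^2 - (\gamma - 1)}{\gamma + 1}.$$

For strong (adiabatic) shocks ($M_1 \gg 1$) and a monatomic gas ($\gamma = 5/3$),

$$\frac{\rho_2}{\rho_1} \to \frac{\gamma + 1}{\gamma - 1} = 4.$$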
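The stationary-shock jump conditions can be explored numerically. The Python sketch below uses the standard ideal-gas results for the density and pressure ratios across an adiabatic shock as a function of upstream Mach number; the function names and the sample upstream state are illustrative assumptions.

```python
import math

def density_ratio(mach, gamma=5.0 / 3.0):
    """rho2 / rho1 = u1 / u2 across a stationary adiabatic shock."""
    return (gamma + 1.0) * mach ** 2 / ((gamma - 1.0) * mach ** 2 + 2.0)

def pressure_ratio(mach, gamma=5.0 / 3.0):
    """p2 / p1 across a stationary adiabatic shock."""
    return (2.0 * gamma * mach ** 2 - (gamma - 1.0)) / (gamma + 1.0)

def downstream_state(rho1, u1, p1, gamma=5.0 / 3.0):
    """Downstream (rho2, u2, p2) from the upstream state, in the shock frame."""
    c1 = math.sqrt(gamma * p1 / rho1)  # upstream sound speed
    mach = u1 / c1
    r = density_ratio(mach, gamma)
    return rho1 * r, u1 / r, p1 * pressure_ratio(mach, gamma)

# Assumed upstream state in arbitrary units: a Mach 3 flow into the shock
gamma = 5.0 / 3.0
rho1, p1 = 1.0, 1.0
u1 = 3.0 * math.sqrt(gamma * p1 / rho1)
rho2, u2, p2 = downstream_state(rho1, u1, p1, gamma)
print(f"Mach 3: rho2/rho1 = {rho2 / rho1:.3f}, p2/p1 = {p2 / p1:.3f}")
for mach in (1.0, 10.0, 1000.0):
    print(f"Mach {mach:g}: rho2/rho1 = {density_ratio(mach):.4f}")
```

The compression approaches, but never exceeds, a factor of 4 for $\gamma = 5/3$, while the pressure ratio grows without bound as $M_1^2$. Stronger compression requires radiative cooling behind the shock.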

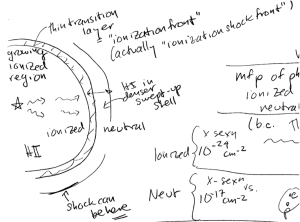

Introduction to Instabilities

See this handout

Rayleigh-Taylor Instability